AI Killed The College Paper. So, What's Next?

It's time to grade the workflow (not the output).

Thanks for subscribing to SatPost.

Today, we’ll talk about the wave of AI cheating for college papers and essays (and a potential solution).

Also this week:

HBO Max’s Hilarious Rebrand

How China Captured Apple

…and them wild posts (including Elizabeth Holmes)

Last week, New York Magazine dropped a nuclear viral article written by James D. Walsh. It was titled “Everyone Is Cheating Their Way Through College” with a guaranteed-to-make-you-click subtitle: “ChatGPT has unraveled the entire academic project.”

The piece just nailed the Venn Diagram of topics that will trigger people in 2025: 1) the state of higher education; 2) an ethically-questionable AI use case; 3) anxiety about the younger generations; 4) cheating; and 5) oh god, “ChatGPT”.

Here are some doozy excerpts from Walsh:

As a computer-science major, [the student] depended on AI for his introductory programming classes: “I’d just dump the prompt into ChatGPT and hand in whatever it spat out.” By his rough math, AI wrote 80 percent of every essay he turned in. “At the end, I’d put on the finishing touches. I’d just insert 20 percent of my humanity, my voice, into it.”

Got damn! Ok, next:

I asked Wendy if I could read the paper she turned in, and when I opened the document, I was surprised to see the topic: critical pedagogy, the philosophy of education pioneered by Paulo Freire. The philosophy examines the influence of social and political forces on learning and classroom dynamics. Her opening line: “To what extent is schooling hindering students’ cognitive ability to think critically?” Later, I asked Wendy if she recognized the irony in using AI to write not just a paper on critical pedagogy but one that argues learning is what “makes us truly human.” She wasn’t sure what to make of the question. “I use AI a lot. Like, every day,” she said. “And I do believe it could take away that critical-thinking part. But it’s just — now that we rely on it, we can’t really imagine living without it.”

This next one goes hard:

After getting acquainted with the chatbot, Sarah used it for all her classes: Indigenous studies, law, English, and a “hippie farming class” called Green Industries. “My grades were amazing,” she said. “It changed my life.” Sarah continued to use AI when she started college this past fall. Why wouldn’t she? Rarely did she sit in class and not see other students’ laptops open to ChatGPT. Toward the end of the semester, she began to think she might be dependent on the website. She already considered herself addicted to TikTok, Instagram, Snapchat, and Reddit, where she writes under the username maybeimnotsmart. “I spend so much time on TikTok,” she said. “Hours and hours, until my eyes start hurting, which makes it hard to plan and do my schoolwork. With ChatGPT, I can write an essay in two hours that normally takes 12.”

The teachers are trying to battle back…

Teachers have tried AI-proofing assignments, returning to Blue Books or switching to oral exams. Brian Patrick Green, a tech-ethics scholar at Santa Clara University, immediately stopped assigning essays after he tried ChatGPT for the first time. Less than three months later, teaching a course called Ethics and Artificial Intelligence, he figured a low-stakes reading reflection would be safe — surely no one would dare use ChatGPT to write something personal. But one of his students turned in a reflection with robotic language and awkward phrasing that Green knew was AI-generated. A philosophy professor across the country at the University of Arkansas at Little Rock caught students in her Ethics and Technology class using AI to respond to the prompt “Briefly introduce yourself and say what you’re hoping to get out of this class.”

…but it’s a Sisyphean task:

Still, while professors may think they are good at detecting AI-generated writing, studies have found they’re actually not. One, published in June 2024, used fake student profiles to slip 100 percent AI-generated work into professors’ grading piles at a U.K. university. The professors failed to flag 97 percent. It doesn’t help that since ChatGPT’s launch, AI’s capacity to write human-sounding essays has only gotten better.

Which is why universities have enlisted AI detectors like Turnitin, which uses AI to recognize patterns in AI-generated text. After evaluating a block of text, detectors provide a percentage score that indicates the alleged likelihood it was AI-generated. Students talk about professors who are rumored to have certain thresholds (25 percent, say) above which an essay might be flagged as an honor-code violation. But I couldn’t find a single professor — at large state schools or small private schools, elite or otherwise — who admitted to enforcing such a policy.

Most seemed resigned to the belief that AI detectors don’t work. It’s true that different AI detectors have vastly different success rates, and there is a lot of conflicting data. While some claim to have less than a one percent false-positive rate, studies have shown they trigger more false positives for essays written by neurodivergent students and students who speak English as a second language.

Turnitin’s chief product officer, Annie Chechitelli, told me that the product is tuned to err on the side of caution, more inclined to trigger a false negative than a false positive so that teachers don’t wrongly accuse students of plagiarism. I fed Wendy’s essay through a free AI detector, ZeroGPT, and it came back as 11.74 percent AI-generated, which seemed low given that AI, at the very least, had generated her central arguments. I then fed a chunk of text from the Book of Genesis into ZeroGPT and it came back as 93.33 percent AI-generated.

Grim. Very grim.

Of course, every generation goes through some similar education-related concern. Socrates famously worried that the act of writing was detrimental to memory and therefore thinking. In more recent decades, various inventions (from calculators to spell check to Google to Wikipedia to Microsoft’s Clippy) have caused consternation over how students will learn critical thinking skills.

Generative AI is a totally different ball game than these previous scares, though.

Writing and submitting papers has underpinned the entire college project for over a thousand years. Now, a B-quality paper is a single prompt and five seconds away.

What’s the solution? I’m the 6,556,987,999th most qualified person in the world to answer this question, so let me start by sharing one statement and one memory:

The statement: The essay/paper assignment as a pedagogical tool is over. It’s cooked. It’s done for. There is a pre- and post-ChatGPT history for the essay as a student-grading device. Hat tip for lasting so long, and we should all celebrate by framing our high school narrative analysis of The Great Gatsby (you should also frame it because it’s the 100th anniversary of Fitzgerald’s classic).

The memory: I graduated university with a history degree as a solid B- student. I did so by mostly taking Wikipedia articles, going through the reference section, borrowing books from the library based on those references and then triangulating all of my non-original thoughts into a 5-page essay of double-spaced 12 font (sometimes 13 font with wider indents if I needed to make it look longer).

Because of the memory, I’m not super judgmental of college students using ChatGPT to power through their assignments. However, because of the statement, I am pretty pessimistic about the academic project if schools don’t figure out a way to integrate generative AI into the curriculum.

Everyone knows that college/university is a bundle of goods including:

critical thinking development

knowledge attainment

job market signalling

finding new friends and dating people

networking

light or heavy indoctrination (depending on the school)

a taste of independent living…

…and also young adult babysitting

playing Super Smash Bros. on N64 with the homies

status

making my Asian parents happy

dicking around with no real responsibilities

social and communication skills

eating poutine at 4am after getting absolutely gassed for Peel Pub’s $5 pitcher special on Tuesdays

As schools are presently constituted, generative AI really threatens the first three goals (critical thinking development, knowledge attainment, job market signalling).

Take my subpar university career as an example. The reality is that my real learning took place by reading random books on my own or, as Mark Twain said, “Never let school interfere with your education.”

Still, going through the Wikipedia motions had elements of knowledge attainment and some skill being exercised. I at least had to go through the work of writing 5 pages-ish of words (depending on the font) and finding random quotes from physical books that weren’t just a straight-up copy-and-paste from the internet. Also, the fact that I could complete those tasks signalled to potential employers that I could put in the effort to do a job, even if it was fake laptop work…which was basically the first decade of my professional career.

All of that is short-circuited by ChatGPT because teachers will (understandably) default to assuming that a take-home essay was done by AI. Meanwhile, employers won’t trust the “grading” system…which already suffers from widespread grade inflation.

So, what do colleges and universities have to do to force critical thinking and rebuild a signal? They need to incentivize different outcomes.

Definitely go back to oral and Blue Book exams. But let’s also accept the reality that generative AI isn’t going anywhere. Let’s accept the reality that the entire next generation is interacting with these tools on a daily basis.

If we accept that reality, then this suggestion by neuroscientist Erik Hoel — to grade the workflow instead of the output — makes a lot of sense and can be deployed in a reasonable timeframe:

Why, in 2025, are we grading outputs, instead of workflows?

We have the technology. Google Docs is free, and many other text editors track version histories as well. Specific programs could even be provided by the university itself. Tell students you track their workflows and have them do the assignments with that in mind. In fact, for projects where ethical AI is encouraged as a research assistant, editor, and smart-wall to bounce ideas off of, have that be directly integrated too. Get the entire conversation between the AI and the student that results in the paper or homework. Give less weight to the final product—because forevermore, those will be at minimum A- material—and more to the amount of effort and originality the student put into arriving at it.

In other words, grading needs to transition to “showing your work,” and that includes essay writing. Real serious pedagogy must become entirely about the process. Tracking the impact of education by grading outputs is no longer a thing, ever again. It was a good 3,000 year run. We had fun. It’s over. Stop grading essays, and start grading the creation of the essay. Same goes for everything else.

Admittedly, I wasn’t sold on Hoel’s proposal at first glance.

I totally understand there are many ways to learn (hands-on, talking, visual, etc.) but I am firmly in the camp that writing is the best way to force critical thinking. As a newsletter purveyor, I know I’m talking my own book, but I would say the same thing even if you waterboarded me.

YC co-founder Paul Graham has a great essay titled “Writes and Write-Nots”, in which he describes exactly this kind of future, where more and more people over-rely on AI:

I'm usually reluctant to make predictions about technology, but I feel fairly confident about this one: in a couple decades there won't be many people who can write. […]

The reason so many people have trouble writing is that it's fundamentally difficult. To write well you have to think clearly, and thinking clearly is hard.

And yet writing pervades many jobs, and the more prestigious the job, the more writing it tends to require. […][Due to AI], all pressure to write has dissipated. You can have AI do it for you, both in school and at work.

The result will be a world divided into writes and write-nots. There will still be some people who can write. Some of us like it. But the middle ground between those who are good at writing and those who can't write at all will disappear. Instead of good writers, ok writers, and people who can't write, there will just be good writers and people who can't write.

Graham says this is a different situation from previous lost skills (e.g., blacksmiths) because “writing is thinking”. It’s a much more generalized problem.

With respect to colleges and universities, I do think it’s very very bad that they can no longer force students to sit down and write stuff (or even read stuff).

But after digesting Hoel’s point, I realized that grading the workflows is a decent alternative because the AI chat interface is writing. ChatGPT and its competitors are incredibly powerful conversational partners intermediated through text. It may not be “5-page essay with 12 font” type of writing but it is writing that is natural to a younger generation. Hell, Socrates would recognize the ChatGPT conversation as closer to the oral tradition of learning and his Socratic method than the “5-page essay with 12.5 font”.

I also think there are many advantages to the AI chat route as a pedagogical tool. Think about how often we text via SMS and group chats every day. It’s very natural, especially compared to a blank page with a blinking cursor. Compared to asking a human, there are no concerns about asking “stupid” questions. A major part of learning is staying motivated and engaged. If that means a chat interface, then so be it.

To be extra extra extra clear, I’m talking specifically about grading workflows over outputs in the context of higher education. I’m long past having to write for school and will only ever publish my own words with my own idiotic jokes. People should absolutely be writing on their own on topics they are interested in all the time. Writing forces you to wrestle with an idea like nothing else. Write for yourself. In a journal or a blog or online or in group chats or in email chains or in random Amazon reviews for banana slicers. Always be reading, writing and thinking.

In a recent interview, OpenAI CEO Sam Altman explained how he saw different age demographics using ChatGPT:

It reminds me of when the smartphone came out and every kid was able to use it super well. Older people took like three years to figure out how to do basic stuff. […]

The generational divide on AI tools right now is crazy. […]

I think [this is a] gross oversimplification but older people use ChatGPT as a Google replacement. Maybe people in their 20s and 30s use it as like a life advisor. Then, people in college use it as an operating system.

Even if you want to discount Altman’s take for inherent bias, it feels directionally correct. With AI chatbots, I think it makes sense to meet the students where they are at.

Ultimately, the higher education bundle we talked about earlier is supposed to prepare students for the real world. Guess what? Knowing how to use AI, how to manipulate the correct models, how to ask the right questions and how to write the right prompts is what will be valuable in the coming decades. I give a lot of credence to the popular line that “AI won’t take your job, but someone who knows how to use AI will.”

So, grade the workflow (and not the output). Let’s just keep some form of writing to force critical thinking and make sure there’s a signal for future employers.

Is grading the workflow actually worse than grading Trung Phan’s Wikipedia-sourced essays? It might not be the ideal situation, but we have to be realistic about the technology. It’s definitely better than grading a “5-page essay with 13 font” that was 98.5% done by AI. The teacher can also train an AI on his or her requirements and have it mark the workflows, freeing up time for more hands-on teaching. Again, is that any worse than a teacher reading 100 papers on The Great Gatsby that are all +/-2% variations of Coles Notes?

Honestly, smartphones and their apps, as distraction machines, have probably done more damage to kids’ ability to focus and learn than AI ever will, especially if a workflow standard is implemented.

“Ok, Trung, will you let your kid use AI?”

Let me first say that I’m not giving my son a smartphone until he’s at least 15. However, we already allow him to use a laptop for design work and to research topics he’s curious about.

On the few occasions he’s dabbled with AI chat, I’ve applied the same rules I do for general screen time: I limit the usage and only allow him to interact with it under supervision. I make sure he’s not getting the sycophantic model update and teach him how to cross-check the facts. It’s an intellectual sparring partner, not a “you don’t have to ever do any cognitive work” partner.

If you’ve ever played the “why game” with your kid — as in they ask “why?” 10x in a row on some random topic and you tap out after the first three questions — then you’ll know that ChatGPT is a high-quality learning tool in the right context.

Part of that context is availability, and I’ve borrowed a concept called “Best Available Human” from Wharton Professor Ethan Mollick as a decent principle:

I would like to propose a pragmatic way to consider when AI might be helpful, called Best Available Human (BAH) standard. The standard asks the following question: would the best available AI in a particular moment, in a particular place, do a better job solving a problem than the best available human that is actually able to help in a particular situation?

I also want my kid to know how to use AI because there is obvious upside: the tool is flexible enough to meld with any student’s specific needs. Per Nature, a recent “meta-analysis of 51 research studies published between November 2022 and February 2025…indicate that ChatGPT has a large positive impact on improving learning performance”. To be sure, the classroom results required proper integration of the tool as an intelligent tutor and learning partner.

This classroom integration is still a work-in-progress but it will come.

There’s a long history of the first use case of new technologies missing the mark. This trend plays on the idea of skeuomorphism, when an older design cue is used to make something new feel familiar:

Electricity was first adopted in factories in the early 20th century…by swapping out centralized steam engines for a single centralized electric motor that still drove shafts running the entire length of a building. Eventually, factory owners realized there didn’t have to be a centralized electric-powered motor. They could actually build entirely new factories and workflows because electricity could operate in more flexible and modular floor plans.

Automobiles were initially conceived as “horseless carriages”…and even featured whip holders (and it took time for the steering wheel, dashboard and pedals to be integrated).

Early television was filmed like stage plays…directors used single cameras on a small set and the scripts were still very dialogue heavy (close-ups, camera movements, editing and more visual language came after).

Steve Jobs thought the killer app for the first iPhone…was a better phone call experience. He specifically pointed at a swipe-able contact list as the breakthrough. The iPhone didn’t even launch with third-party apps. Eventually, the iPhone’s “computer in your pocket”, GPS, contact list and always-on internet led to actual killer apps including social media (Facebook), transportation (Uber), convenient payments (Apple Pay), navigation (Maps) and on-demand single bags of Doritos when you’re too lazy to go to 7-Eleven (Instacart).

The first smartwatches tried shrinking smartphone UI onto the wrist…before integrating more native features such as quick glances, haptics, and passive health tracking.

My friend Jack Butcher makes a fair point: once we figure out how to properly use AI in education, its flexibility and ease of use will “raise the floor of education”.

This doesn’t mean 10 hours in front of a screen, but it also doesn’t mean 0 hours.

I ultimately want my son curious, engaged and motivated on his own. That’s the best defense against a sclerotic education system (“Never let school interfere with your education.”)

Let’s get back to the colleges and universities. Confidence in the value of higher education was declining sharply even before the launch of ChatGPT. Nearly 3 years later, how have these institutions responded? It doesn’t look like they’ve done much.

There has to be way more imagination…especially with some of these insane tuition prices. I don’t care how much Super Smash Bros. someone gets to play with the homies; that alone is not worth $50k+ a year.

The college essay is no longer the right teaching vehicle in a world of ChatGPT. It simply won’t survive AI chatbots, but that doesn’t mean the entire academic project is doomed. Schools do, however, have to adjust immediately to the potential of generative AI. If they don’t, you know New York Magazine will be licking its chops for future articles.

***

UPDATE (05/18/2025): I received a lot of reader responses to this piece and wanted to share one from cognitive psychologist Christoph Hügle:

Dear Trung,

I'm a longtime subscriber and first of all, thanks for your great work! I look forward to your newsletter every Saturday!

I have a few thoughts on this week's main article. I'm a cognitive psychologist, so it's a rare occasion that I know something about the main article's topic :D

For me, students cheating on their essays with AI really is about one question: When are essays a good assessment of student learning to begin with?

In general, you should assess students on what you taught them and expect them to be able to do by the end of the course (ie. the learning outcomes). Don't create an exam or essay assignment just to have something to grade your students on. Teaching and learning activities, assessment, and learning outcomes should fit together. (That's called constructive alignment.)

Let's say your assessment is an essay. It tests for several things: The ability to research, write, self-organize and manage your time, as well as your subject knowledge.

So my questions to teachers would be: Did you teach your students to write essays? If not, why do you grade essay-writing ability in the final assessment, which is supposed to be about knowledge and skills gained in your class? And if you want your students to write good essays, why is an AI-assisted essay bad if it leads to better papers? Or do you really want to measure something else, like subject matter knowledge? Then a well constructed exam is the way to go.

While I agree with the importance of thinking and writing clearly, it seems to me that essay assignments are done more out of tradition and convenience than because the teacher thought hard about how best to assess learning outcomes. If you want to test for writing ability, you can do that in an on-site exam as well. Have your students research a topic or study the material beforehand (they can use AI for that), then have them write an essay in a 2-3 hour exam. (Law exams in Germany are like that, for example.) If you want to test for knowledge, however, use a written or oral test with many questions to cover all content.

Now, the idea of grading the workflow is interesting, but it immediately raises so many questions. Are you teaching your students a specific (AI) workflow you want them to demonstrate? How do you decide what's a good workflow? Getting fast and efficient from the first prompt to the final essay, or taking longer, querying the AI, and learning things along the way? Wouldn't it be enough for one class each year to assess this ability?

For me, that's just creating another way to grade students without aligning it to what you actually teach. I would ditch the essays except for essay questions in tests. Instead, I'd have students complete projects like conducting a study: Researching the literature, creating a research question, conducting experiments, writing it all up, presenting the results etc. This is much closer to what you're supposed to learn in uni and do after you graduate. Also, students could work together with AI since they'd still be critical for conducting the actual study. Other projects could be students tackling real-world problems by using their new knowledge and skills.

I studied in the Netherlands, and the Dutch had this figured out really well. Assessments were either traditional exams, open-book exams with applied questions, real-world projects with presentations, or mini-studies you had to run. In other words, this can also be an opportunity for universities to raise the quality of their teaching.

That's all - have a great week!

Best

Christoph

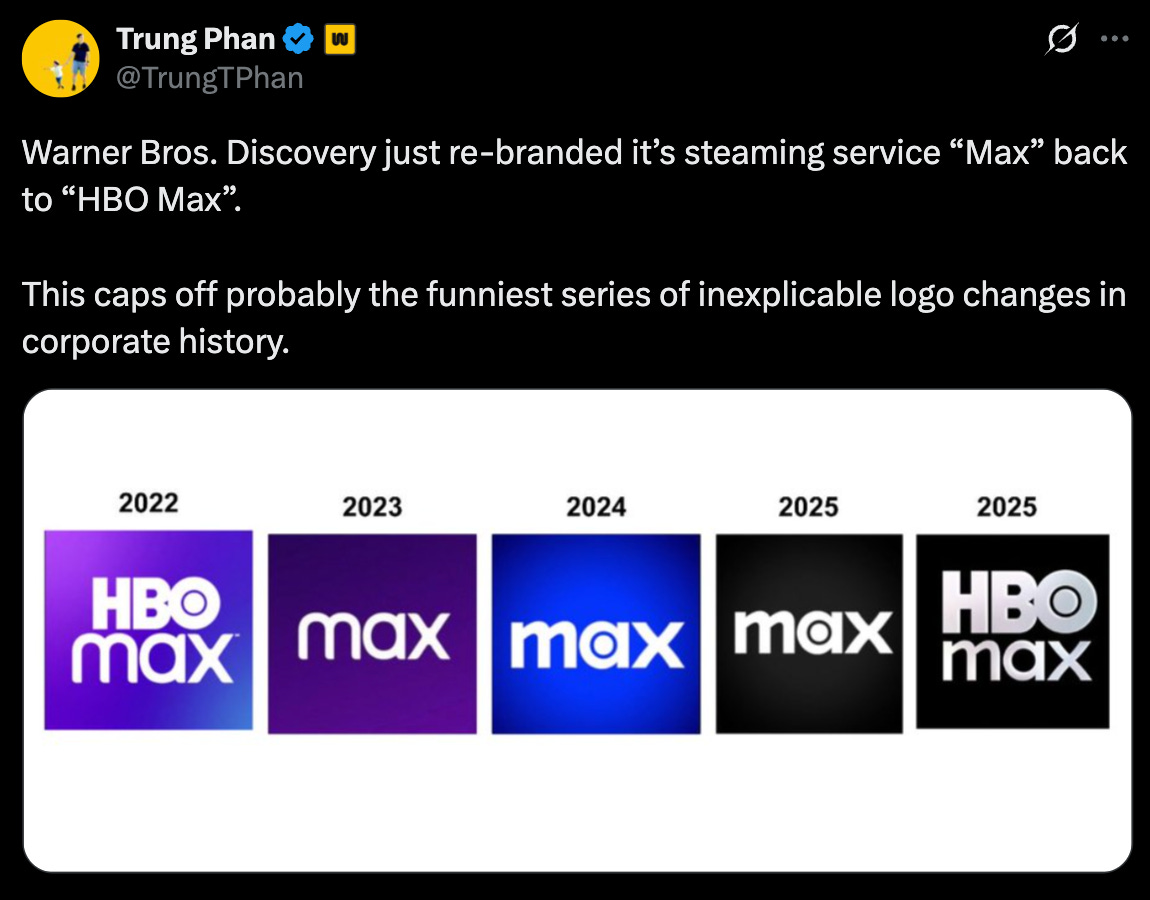

HBO Max’s Hilarious Rebrand

So, back in 2023, Warner Bros. Discovery did one of the most head-scratching rebrands ever. Looking to compete with the major streaming services (Netflix, Amazon Prime, Apple TV Plus, Disney+ etc.), it changed the name of its streaming app from “HBO Max” to “Max”. This is on top of the previous existence of the HBO Go and HBO Now apps. Very confusing, I know.

If you squint your eyes, the logic made some sense. Netflix is the Walmart of streaming: it offers something for everyone. Similarly, Warner Bros. Discovery was combining the most prestigious of prestige TV from HBO (The Wire, The Sopranos, Band of Brothers, Succession, Six Feet Under, Silicon Valley, Deadwood, The White Lotus etc.) with the incredibly popular (and often lower-brow) reality shows from Discovery (90 Day Fiancé, Sister Wives, Gold Rush, House Hunters, Fixer Upper etc.).

Max as a brand and name did cast a wider net and the streamer has locked in over 100 million subs. But it’s very very expensive to play with Netflix, Disney+ and Big Tech.

The change from “Max” back to “HBO Max” is probably a short-term recognition that the service is actually more niche. Warner Bros. should aggressively license its content (which is what Sony does; it’s an “arms dealer” of films and TV) instead of trying to be the new Netflix. Longer term, it does look like Warner Bros. wants to bring back the HBO brand halo so it can sell to Apple for $10B+ (pure speculation, but that’s what we’re here for).

PS. The HBO Max social media team is doing incredible work rolling with the punches on these ridiculous logo changes … seriously great work.

PPS. Mercifully, Disney released an ESPN streaming app and kept it simple. The app is called…wait for it…ESPN…and it’ll cost $29.99 a month or $299 a year (or $34.99 a month bundled with Disney+).

This issue is brought to you by Liona AI

Launch a GPT Wrapper in Minutes

As many of you SatPost readers may know, I’ve been building a research app for the past few years (Bearly AI). The app required a flexible backend to manage all of the LLM APIs.

It’s been so useful that we turned the backend into a product called Liona AI.

I wanted to call it Liona Max HBO Max but my co-founder said “bro, chill out” and that was that.

Anyway, this easy-to-use platform lets your users and teams connect directly to OpenAI, Anthropic, Grok, Gemini, Llama, Cursor and more while you maintain complete control over security, billing, and usage limits.

How China Captured Apple

Last week, I wrote about “Warren Buffett’s $160B+ Bet on Apple” (aka the best tech trade ever probably maybe).

Let me share two follow-ups on that piece.

First, I have never received so many replies in my life about a single typo. Hilariously, it had nothing to do with Buffett, Berkshire or Apple. It had to do with me incorrectly identifying the winner of the 1986 Masters as Jack Nicholson (the actor) rather than Jack Nicklaus (the iconic golfer). Sorry, but also, the two legends SHARE THE SAME FIRST SEVEN LETTERS OF THEIR FULL NAMES. It was an honest and easy-to-make mistake. SatPost readers are apparently huge golf fans.

Second, The Sunday Times dropped an absolute doozy of a piece about how Apple’s investment in China over the past decade was hugely responsible for creating a geopolitical peer rival to America.

It's an excerpt from “Apple in China: The Capture of the World's Greatest Company” by Patrick McGee:

As [Apple executive in China Doug] Guthrie studied Apple’s operations, a common theme emerged in talks with dozens of suppliers. “Working with Apple is really f***ing hard,” they’d tell him. “So don’t,” he’d respond. And they would demur: “We can’t. We learn so much.”

By 2014, Apple was so frequently sending America’s best engineers to China — what one Apple veteran calls “an influx of the smartest of the smart people” — that the company convinced United Airlines to fly 6,857 miles from San Francisco to Chengdu three times a week, pledging to buy enough first-class seats to make it profitable.

Guthrie quickly realised this wasn’t the industry norm. Although suppliers resented the intense pressure and the soul-crushingly low margins offered by Apple, they put up with it because they derived something far more valuable than profits. The deal — let’s call it the Apple Squeeze — was that Apple would exert enormous power over its suppliers multiple hours a day, for weeks and months leading up to a product launch, and in return, the suppliers would absorb cutting-edge techniques. [...]

Guthrie began to realise that Apple, however inadvertently, was operating in ways that were immensely supportive of Beijing’s “indigenous innovation” directive. Chinese officials just didn’t know it because the company was so secretive about how it developed its products.

As one former industrial designer put it: “I don’t remember, ever, a strategic withholding of information. All we cared about was making the most immaculate thing. Every day you’d invent your way through a problem. It was absolutely wonderful as an experience. But I guess we were unwittingly tooling them up with incredible knowledge — incredible know-how and experience.”

As Apple came under political pressure to “give back” to China, Guthrie advocated that the company change tack. “China wants the constant learning,” he told colleagues. “The fact that Apple helps bring up 1,600 suppliers for China — it’s an incredible benefit.”

When staffers added up Apple’s investments in China — mainly the salaries and training costs of three million workers in the supply chain, as well as sophisticated equipment for hundreds of production lines — they realised the company was contributing $55 billion a year to China by 2015: a nation-building sum.

Honestly, kind of disturbing. Why? When I’m on a United Airlines flight and want an extra bag of pretzels or one of those shitty airline headphones that last for a quarter of a film before breaking, they tell me it’s $7.50. When Apple is flying United Airlines to Asia and doesn’t want to make a connecting flight, it asks for a special route that wouldn’t otherwise be profitable, and United Airlines bends over backwards to make it happen. Ugh.

McGee calculates that the $55B-a-year investment Apple made in China was 2x the annual spend that America made in Europe during the post-WWII Marshall Plan reconstruction (on an inflation-adjusted basis).

Here’s a crazy stat: since the iPhone was launched, Apple has trained over 28 million Chinese workers on high-tech manufacturing. That’s more than the entire labour force of California and a key reason why China leads in so many next-gen industries (EVs, solar, drones, robotics).

A key point that McGee makes is that there was no real alternative to China for the type of scale that Apple required. In 2007, Apple sold 1m+ iPhones. By 2012, they were moving 100m+ iPhones. China was the only country that could scale with Apple’s requirements on precision, speed and a flexible workforce.

In an interview with Bari Weiss on the Honestly podcast, McGee offers an analogy: imagine 500,000 people were dropped into Boston to work on the iPhone, and then the next week that same group of 500,000 people went to work on another electronics project in Milwaukee. That’s basically the type of labor flexibility that China brought to the table.

Steve Jobs had tried to make automated plants in America but the iPhone actually requires a ton of human labor (aka “millions of people screwing in tiny screws”).

As Jobs’ supply chain guru, Tim Cook started moving production to China when the iPod took off. The portable music player was initially manufactured in Taiwan, but Cook soon realized only China could hit Apple’s ambitious goals. In 1999, China manufactured 0% of Apple’s products; a decade later, that figure was 90%+.

In a 2017 interview with Fortune, Cook explained China’s manufacturing edge:

There's a confusion about China…the popular conception is that companies come to China because of low labor cost. I’m not sure what part of China they go to. But the truth is China stopped being the low labor cost country many years ago.

That is not the reason to come to China from a supply point of view. The reason is because of the skill and the quantity of skill in one location. And the type of skill.

The products we do require really advanced tooling and the precision that you have to have in tooling and working with the materials that we do are state-of-the-art. The tooling skill is very deep [in China].

In the US, you could have a meeting of tooling engineers and I'm not sure we could fill this room. In China, you could fill multiple football fields [with tooling engineers].

The vocational expertise is very very deep here. I give [China’s] education system credit for continuing to push on that even when [other countries] were deemphasizing vocational.

This video was brought to my attention by Steven Sinofsky, now an investor at a16z, who previously ran the Windows division at Microsoft. He shared it in the context of his experience with the PC supply chain:

The PC makers — Original Design Manufacturers [ODMs] — would say the same thing about making Apple laptops. That was the dry run for the iPhone. There would be no good Windows laptops at all had the ODMs not learned from doing the work for Apple. […]

The thing that the "we don't want to make iPhone" crowd does not understand is that this is not just "cheap labor" but companies outsourced an enormous amount of skill to China over time. [as Tim Cook says in this video…it is also quantity of that skill]. And then China moves up the value chain.

At some point this hollows out talent in US companies. Apple is an exception not the rule in how much it continued to do. For a long time Intel [did] too as it made not just chips but kept moving up the chain to integrated chipsets then motherboards. Other industries like phones, laptops, autos have outsourced the skills to even make things. The US companies became sales and marketing. "Product Development in the US” becomes “going to Asia to see what prototypes they made there".

A US PC maker says "we'd like a 14" laptop with these kinds of specs" and then China/Taiwan ODMs design the whole thing. That's why all the "Ultrabooks" (compete with MacBook laptops) looked almost exactly the same. Intel prototyped them with Asia ODMs and then the ODMs tweaked them. PC makers did little of the engineering.

In McGee’s estimation, it was fully logical for Apple to bet on China…up until 2013. Within a week of President Xi taking power, there was an aggressive anti-Apple campaign. The Chinese government and media went on a “blitz” saying that Apple was taking advantage of the country and taking all the margin from the manufacturers.

In hindsight, Apple’s leadership should have realized how beholden they were to the Chinese government. Instead of building resiliency into its business, Cook and Apple doubled down on the country with those Marshall Plan-scale investments.

There’s a world where Apple took a decade of profits and, instead of spending $700B on buybacks, started building out a backup supply chain. Now, China has it completely over a barrel (note: Apple has responded that some of the book’s reporting is inaccurate, but it wasn’t clear which parts it disagreed with).

Apple is making pronouncements that it’s moving manufacturing to India and Vietnam. In fact, it says 1/4 of its iPhones will be made in India within a few years. But the reality is that Apple is mostly “assembling” its products in those countries. Most of the manufacturing of key components, along with the related supply chains, is still very much in China. Not to mention the fact that Trump specifically criticized Cook’s plan to do more work in India instead of America.

India clearly has the manpower potential, but its infrastructure is lagging and its government is not nearly as centralized as China’s CCP-led one (centralization makes it much easier to run a national industrial policy). Further, the risk Apple faces is that if it moves too much manufacturing away from China too fast, the Chinese government could seriously hamper its existing manufacturing business as well as its retail operations (China is one of Apple’s largest consumer markets). Even with a 90-day pause in the current US-China trade war, we are all digesting potential tail risks…with a hot war hanging over everything.

It’s a huge manufacturing pickle. It’s also an App Store monopoly pickle and an Apple Vision Pro/AI flop pickle. Looking back, Apple kind of sleepwalked into all of these pickles…which I guess is easy to do while you’re making the insane profits it was making. I won’t say pickle again, but I will let you know that 2027 is a huge year for Apple. For the 20th anniversary of the iPhone, The Verge says Apple is planning to release a “mostly all glass” iPhone as well as its first foldable iPhone, its first smart glasses to compete with Meta’s Ray-Bans, and camera-enabled AirPods and Watches.

Somewhat ironically, there is no one else in the entire world as qualified as Tim Cook to deal with Apple’s supply chain challenge. He could still right the ship for the long term.

However, a cautionary tale that McGee brings up is Jack Welch and General Electric. For decades, Welch was seen as America’s greatest CEO, mastering capitalism to briefly make GE the most valuable company in the world. But it turned out Welch was stripping away GE’s resilience and totally financializing the company to the point it blew up during the Great Financial Crisis.

In a few decades, will we view Cook’s stewardship of Apple in the same light? He squeezed out every efficiency for the next dollar and took the market cap from $300B to $3T. But at what cost?

Sent from Trung’s iPhone

Links and Memes

21 Observations Watching People…a very good listicle from wedding painter Shani Zhang, who is really, really good at reading body language and social tics. My wife read it and said that Shani’s 4th observation reminded her of me…ummm…ok:

“4. I watch the person with the loudest laugh. The most striking thing isn't the volume—it's the feverish pitch. As the night goes on, it begins to sound more like desperation. Their joy has a fraying quality; it is exhausting to carry because it comes with a desire to seem happy and make others happy at all times.”

For real, though…Shani ain’t pulling punches:

“11. It is easy to spot the person in the room who thinks they are better than everyone. It is the person uninterested in giving any of their attention, the genuine and open-ended kind, to anyone else. This is also painful to see, because they often cannot see their own misery, how unpleasant the world is if no one is good enough to be loved.”

***

What does defense tech firm Palantir actually do? The question has turned into a running meme, but it was expertly answered by Nabeel Qureshi on Lenny Rachitsky’s podcast. Nabeel worked at Palantir for 8 years and explains how the company perfected a method to wrangle data (clean it, standardize it, make it searchable and manipulable) within large corporate and government organizations. This data is massively valuable in the AI age:

“Because one lens through which you can view [Palantir] is they spent 20 years basically building the mother of all data foundations for every important institution in the world.

And guess what, that's very valuable now that AI models are out [and what matters is] proprietary data that isn't public. Suddenly you have access to that and you are in a very privileged position to help your customers deploy AI in a way that makes them successful and that solves real business problems.”

Another corporation that often gets the “what do they actually do” question is Salesforce. In fact, I’ve heard Palantir described as “Salesforce for killing people”. But now that we’ve clarified what Palantir does, Salesforce insiders need to spill the beans.

***

Airbnb has revamped its app…to facilitate more services (the ability to book chefs, trainers, and stylists) on top of room bookings. Wired has a good profile on CEO Brian Chesky and the change. He is looking for a way to increase the TAM for the $85B vacation company (users only use the platform 2x a year) and has ambitions to turn an Airbnb login into a digital passport. Feels like a tall order tbh. These types of services are fragmented across Craigslist, Google, Yelp, Angi, Upwork and Taskrabbit. But as Netscape CEO Jim Barksdale said, “There are only two ways to make money in business: One is to bundle; the other is unbundle.”

Ben Thompson is quite skeptical of the Airbnb play:

“The fact that end users only use Airbnb once or twice a year is precisely what makes the entire service work; again, Airbnb matches up hosts who need their properties occupied as often as possible with travelers who only need a property once-or-twice a year — solving that mismatch is the foundation of the entire business, and is well worth Airbnb’s fee.

The need fundamentally changes when it comes to day-to-day life in your hometown: yes, users need help in finding service providers, and yes, service providers need help in finding customers. The goal for both, however, is to establish long-term relationships; once you have a hairdresser, you want to visit the same hairdresser repeatedly, not find a new one every time. And, by extension, neither you, nor the hairdresser, has any interest in including Airbnb in every transaction forevermore. In other words, what Airbnb is proposing may end up being valuable, but it will be very hard for Airbnb to capture that value in a meaningful way.”

***

Some other baller links for your weekend consumption:

Some guy swiped 2 million times on dating apps…but only matched once. Signül explains why this is actually the norm and the devious ways dating apps have fully monetized both men and women.

Take-Two Interactive owns Grand Theft Auto…as well as the NBA 2K series. Those two franchises make up most of the value of the $40B game publisher. Back in 2023, The Business Breakdown podcast had a great…errr…breakdown on Take-Two and how it’s at risk from this over-reliance on a few titles (and also how it way, way overpaid for mobile games firm Zynga).

Epic Games founder Tim Sweeney spent $100m in legal fees fighting Apple…and the iPhone maker’s App Store policies. Sweeney tells Peter Kafka that his Fortnite game also missed out on hundreds of millions in revenue while it was blocked from iPhones. But he believes the fight is worth it because in a world where people spend more and more time in the digital sphere, a 30% tax totally kneecaps countless business models and potential use cases.

Grok chatbot was injecting controversial South African politics takes…into completely unrelated posts. The xAI team called it an “unauthorized modification” and plans to publish all of its system prompts on GitHub…but it may have to do more to ensure full transparency on the model (which is what users really want after previous episodes of LLMs Gone Wild, including “Woke Gemini”, “Sycophantic ChatGPT” and “That Time DeepSeek Got Mad At Me”).

Tom Cruise has been on an absolute heater…promoting Mission: Impossible – The Final Reckoning. The last real Hollywood movie star is pulling out all the stops to get people into IMAX to see his latest MI film, including sharing some jaw-dropping behind-the-scenes footage of him doing Cruise-y stunts only Cruise would do.

President Trump signed an executive order to lower pharma drug prices…the gist is that while America is 4% of the global population, it is responsible for 50%+ of global pharma drug profits. Americans do pay a lot more for drugs and are effectively subsidizing drug usage in other countries. Trump’s order will make it so Americans get the “Most Favored Nation” price, which is equivalent to the lowest listed price in the world. The issue is that pharma companies may only slightly reduce American prices and raise prices everywhere else. Sales will take a hit and long-term R&D funding will be impacted. It’s quite a tricky balancing act, as explained in this University of Southern California piece.

Someone made a LegoGPT…yes, LegoGPT. This is equal parts dorky and awesome. Carnegie Mellon made an AI model that takes text prompts and creates physically stable Lego structures. To ensure buildable outputs, the researchers also created “a separate software tool that can verify physical stability using mathematical models that simulate gravity and structural forces”, per Ars Technica.

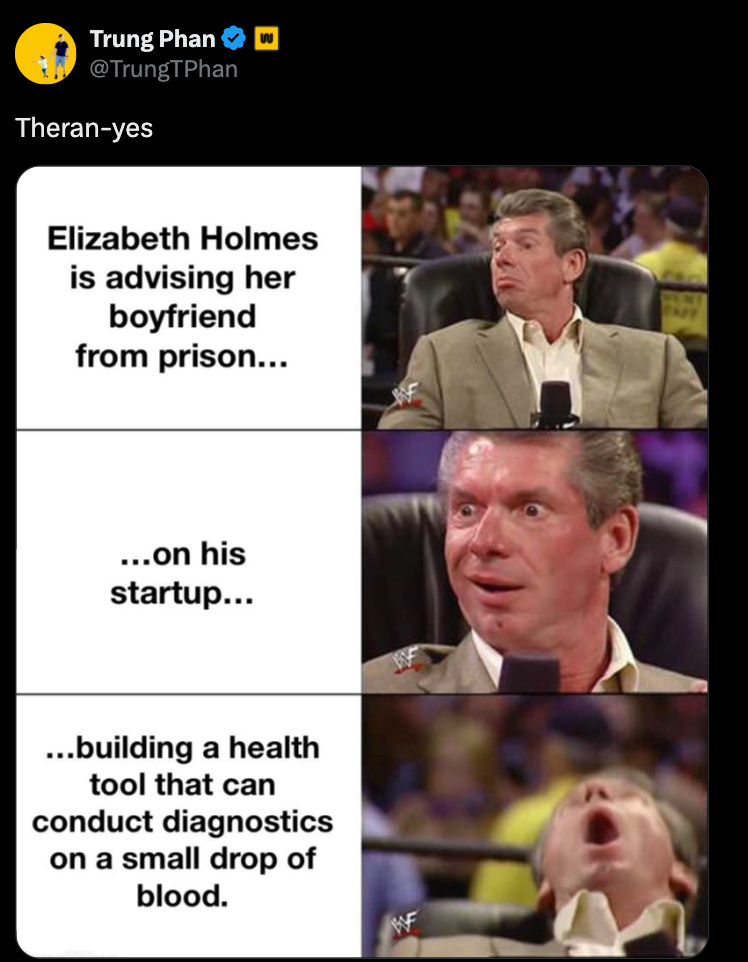

…and them wild posts, including news that Elizabeth Holmes’s partner is launching a health startup called Haemanthus (the Greek word for “Blood Flower”) that can run diagnostics on blood. Holmes has been sharing ideas. The tool is focused on animals but will eventually target humans.

Separately, God-tier comedian Nathan Fielder has apparently been visiting Holmes in prison, which means he’s about to make the funniest piece of content in comedic history. It’s also a reminder that he once did an episode of Nathan For You where he rigged a blood test to “prove” that people had more fun while hanging around him:

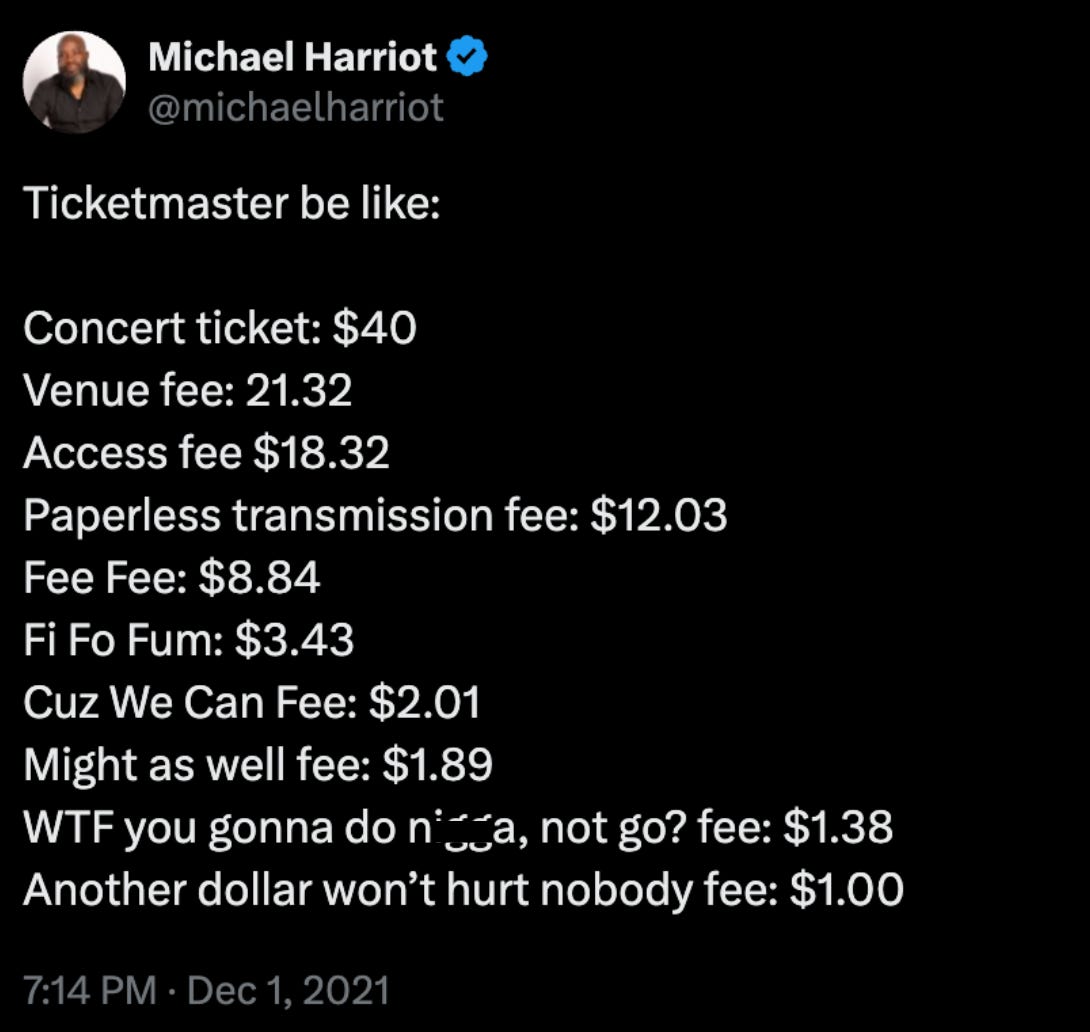

Finally, the Federal Trade Commission (FTC) ban on junk fees went into effect. It targets sketchy ticketing and hotel checkout pages: advertised prices from these companies now have to include all mandatory charges…so no more of those ridiculous surprises when you go to pay.

If you don’t know what I mean, here’s perhaps the greatest tweet ever about Ticketmaster.
