Jensen Huang, Dario Amodei and Manufacturing Intelligence at Scale

PLUS: SpaceX's Starship miracle, Taco Bell's franchise model.

Thanks for subscribing to SatPost (and welcome to all the new readers that came via Tim Ferriss’ link to my article on Francis Ford Coppola).

Today, we will talk about some recent AI news including an interesting interview with Nvidia’s CEO Jensen Huang and an important blog post from Anthropic CEO Dario Amodei.

Also this week:

SpaceX’s Starship Miracle

Why Taco Bell is a great franchise business

…and them fire posts (including microplastics)

Since the start of 2023, chipmaker Nvidia has seen its stock rise 9x.

I’ve participated in 0.000% of Nvidia’s run-up — well, not in the single stock anyways because I suck at picking stocks (but the memes have been great) — and the company is now worth $3.4 trillion, a few hundred billy behind Apple, the world’s most valuable company.

Nvidia’s founder Jensen Huang owns ~3% of the company and is now worth $119 billion. Jensen could buy Intel ($97B) — the semiconductor giant from the 20th century — with enough funds left over to buy Denny’s ($340m) nearly 65x over. That’s a glorious amount of Grand Slam breakfasts.
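For anyone checking the napkin math, here is a minimal Python sketch using only the figures quoted above (and ignoring the premium an acquirer would actually pay over market cap). The variable names are my own:

```python
# Figures as quoted above, in billions of USD (approximate, as of writing).
jensen_net_worth = 119.0
intel_market_cap = 97.0
dennys_market_cap = 0.34  # $340 million

# Money left after buying Intel, and how many Denny's it could buy.
leftover = jensen_net_worth - intel_market_cap
dennys_multiple = leftover / dennys_market_cap

print(f"Left over after buying Intel: ${leftover:.0f}B")  # $22B
print(f"Denny's purchases possible: {dennys_multiple:.1f}x")  # 64.7x
```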

Nvidia has been the largest beneficiary of the generative AI hype cycle since the launch of ChatGPT almost two years ago.

It’s not even close.

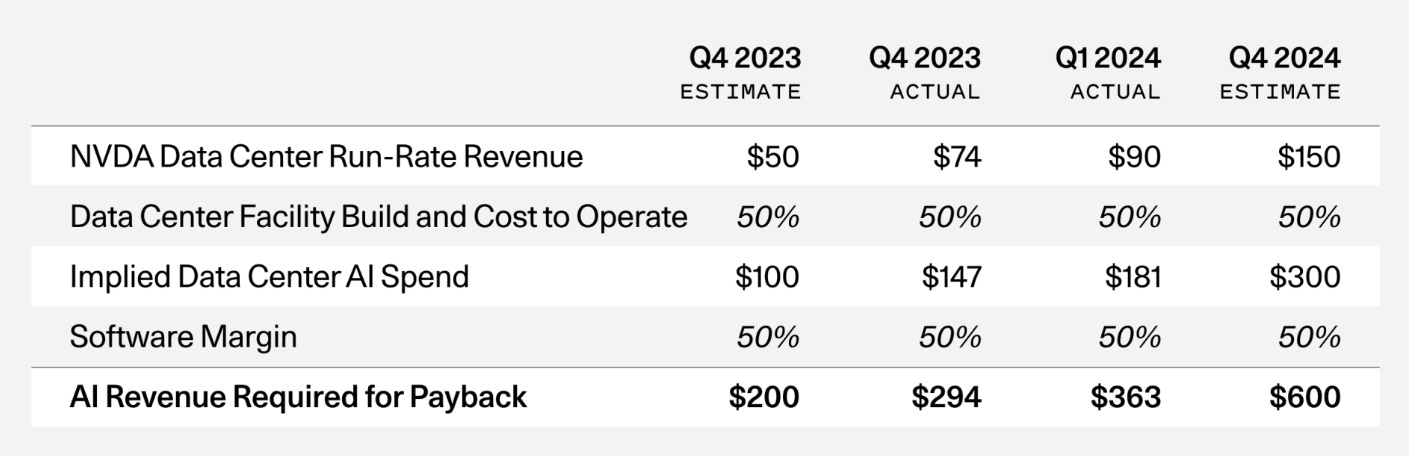

In June, Sequoia Capital investor David Cahn laid out Nvidia’s dominance in an article titled “AI’s $600B Question” with an important…errr…question: where is all the AI-related revenue from the massive capex spend that Big Tech is making in chips and data centres?

Cahn estimates that major tech players — including OpenAI, Google, Microsoft, Apple, Meta, Oracle, ByteDance, Alibaba, X, Tencent, Tesla — will spend $150B on Nvidia chips for 2024. Since these chips account for ~50% of the cost of building an AI data centre, the total bill comes to ~$300B. Assuming the end users of that compute need a 50% gross margin, the companies would need to collectively generate $600B in AI-related revenue to pay back the infrastructure spend.

But even generous napkin math suggests that these tech firms may only generate $100B in AI-related revenue, leaving a “$500B hole”.
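Cahn's calculation boils down to two doublings and a subtraction. Here is a rough Python sketch, assuming (as I read his piece) that GPUs are half of an AI data centre's cost and that the buyers of that compute want a 50% gross margin; the variable names are hypothetical:

```python
# Sketch of the "$600B question" napkin math, in billions of USD.
nvidia_chip_spend = 150.0     # estimated 2024 spend on Nvidia chips
gpu_share_of_dc_cost = 0.5    # GPUs are ~50% of an AI data centre's cost
end_user_gross_margin = 0.5   # assumption: end users want a 50% gross margin

# Gross up chip spend to the full data centre bill (300.0).
total_dc_cost = nvidia_chip_spend / gpu_share_of_dc_cost
# Revenue needed so that bill is only half of revenue (600.0).
required_revenue = total_dc_cost / (1 - end_user_gross_margin)

estimated_ai_revenue = 100.0  # generous estimate of actual AI revenue
revenue_gap = required_revenue - estimated_ai_revenue  # 500.0

print(f"Total data centre cost: ${total_dc_cost:.0f}B")
print(f"Revenue needed: ${required_revenue:.0f}B")
print(f"Gap: ${revenue_gap:.0f}B")
```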

A common response to generative AI's current lack of revenue is that the technology is still in its early days.

Many smart industry players believe that “AI in 2024” is comparable to the “internet in 1999”. Notably, the internet rush wasn't just the Dotcom Bubble but also the Telecom Bubble, when $1 trillion (inflation adjusted) was spent on telecommunications infrastructure including switches, routers, submarine cables, spectrum licenses, broadband cables and more.

While both bubbles burst, that telecom buildout later enabled the rise of Facebook, Uber and Netflix. Meanwhile, older tech giants — Amazon, Google, Apple and Microsoft — rode the subsequent mobile and cloud waves to become some of the most powerful organizations in the world.

In this analogy, Nvidia is playing the role of Cisco Systems, the networking giant that briefly became the world’s most valuable company in 2000 before seeing a drawdown of more than 80%.

It is against this backdrop that Jensen went on the BG2 podcast hosted by investors Bill Gurley and Brad Gerstner. With the caveat that Jensen will obviously present the most bullish case for Nvidia, it was fascinating to hear the industry leader walk through his mental model of the AI opportunity, including:

AI data centres as the new unit of computing

How AI changes software

Everyone will be their own CEO of AI agents

Nvidia as a “market maker” vs. “share taker”

Let's walk through these ideas.

Nvidia and Manufacturing Intelligence at Scale

The way Jensen thinks about AI is based on what he calls “ground truths”, which are assumptions about the technology industry.

Here are two that stand out:

“Intelligence is the single most valuable commodity the world's ever known”: Based on the talk, I take Jensen to mean “intelligence” as the human cognitive ability to learn, plan, reason, delegate, solve problems and create new things.

The way to “manufacture” intelligence at scale requires GPUs: Nvidia built its business on graphics processing units (GPUs). These are in contrast to the central processing units (CPUs) made famous by Intel during the PC revolution. While CPUs excel at varied, sequential tasks (eg. general computing, spreadsheets, word processing), GPUs excel in scenarios requiring massive parallel computations (eg. graphics, machine learning and AI). Based on its GPUs, Nvidia created a new paradigm called “accelerated computing”, which is the “use of specialized hardware to dramatically speed up work, using parallel processing that bundles frequently occurring tasks.”
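To make the CPU vs. GPU distinction a bit more concrete: a lot of machine learning boils down to matrix multiplication, and every cell of a matrix product can be computed without waiting on any other cell. The toy Python below is plain Python running on a CPU, with hypothetical function names, and looks nothing like real GPU code. It computes the cells in a random order and still gets the same answer, which is exactly the kind of independent work a GPU can spread across thousands of cores at once:

```python
import random

def dot(row, col):
    """One output cell: a dot product that depends only on its own inputs."""
    return sum(a * b for a, b in zip(row, col))

def matmul_cells(A, B):
    """List every (i, j) output cell of A x B as an independent unit of work."""
    cols = list(zip(*B))  # columns of B
    return [(i, j, A[i], cols[j]) for i in range(len(A)) for j in range(len(cols))]

def matmul(A, B, shuffle=False):
    cells = matmul_cells(A, B)
    if shuffle:
        # Process cells in random order: no cell waits on any other.
        random.shuffle(cells)
    C = [[0] * len(B[0]) for _ in A]
    for i, j, row, col in cells:
        C[i][j] = dot(row, col)
    return C

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul(A, B))                                # [[19, 22], [43, 50]]
print(matmul(A, B, shuffle=True) == matmul(A, B))  # True
```

A CPU would typically work through those cells a few at a time; a GPU's advantage comes from doing huge numbers of them simultaneously.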

Keep these assumptions in mind as we walk through some of Jensen’s thoughts on the future of AI, including why data centres are the new unit of computing:

[What] Nvidia is trying to do is build a computing platform for this new world. This machine learning world. This generative AI world. This agentic AI world.

What's so deeply profound is that after 60 years of computing, we reinvented the entire computing stack. The way you write software from programming to machine learning. The way that you process software from CPUs to GPUs. The way that the applications from software to artificial intelligence to software tools [are done]. So, every aspect of the computing stack and the technology stack has been changed.

We’re building an entire AI infrastructure and we think of that as one computer. I've said before, the data centre is now the unit of computing to me. When I think about a computer, I'm not thinking about that chip. My mental model and all the software and all the orchestration and all the machinery that's inside, that's my mental model.

These AI data centres and accelerated computing are creating a new way to write software:

Writing software is going to be different…We got all these CPUs back there. We know what it can do and what it can't do. We have $1 trillion worth of data centres [based on the CPU world] that we have to modernize.

[There’s a] trajectory over the next four or five years to modernize that old stuff…so, we have $1 trillion of infrastructure over the next four or five years [that needs to be updated for an AI world].

How software is going to be used will be different. In the future, we're going to have [AI] agents. […]

I'm no longer going to program computers with C++. I'm going to program with [AI] prompting. Isn't that right? Now, this is no different than me talking to my [team] this morning. I wrote a bunch of emails before I came here. I was ‘prompting’ my team.

I would describe the context. I would describe the fundamental constraints. I would describe the mission for them. I would leave it sufficiently directional, so that they understand what I need. I want to be clear about what the outcome is but I leave enough ambiguous space for creativity so they can surprise me. That’s no different than how I prompt an AI.

This new way of interacting with software — from knowing how to program a computer to natural language prompting — means everyone will be their “own CEO”:

I have 60 direct reports. The reason they're on the staff is because they're world class at what they do. They do it better than I do. Much better than I do. I have no trouble interacting with them. I have no trouble ‘prompt engineering’ them. I have no trouble ‘programming’ them right? So, I think that's the thing that people are going to learn. They're all going to be CEOs of AI agents. [The key is] their ability to have creativity, some knowledge, how to reason [and] break problems down, so that you can program these agents.

Nvidia’s role in all of this is to help make the next market, which is a much larger opportunity than trying to take share from the existing computing market.

Jensen frames it as market makers vs. share takers. He bases this view on Nvidia’s history as a pioneer in 3D graphics during the late-1990s and early-2000s:

“If you look at our company slides, we don't show…market share. All we're talking about is how do we create the next thing. What's the next problem we can solve [for our clients]? How can we do a better job for people? […]

Companies are only limited by the size of the fish pond. So, the question is: what is our fish pond? That requires a little of imagination and this is the reason why market makers think about that future…Share takers can only be so big. Market makers can be quite large. […]

Since the very beginning of our company, we had to invent the market for us to go swimming in. [We were at] ground zero of creating the 3D-gaming PC market. We largely invented this market and all the ecosystem. All the graphics card ecosystem. We invented all that.”

Nvidia isn’t only providing the picks and shovels to manufacture intelligence at scale. Jensen says he personally uses AI as a research assistant every day and the company uses it for design and security purposes.

What are the other AI markets to be made?

There has been a lot of AI-related news in recent weeks that shows the spectrum of potential use cases.

But before listing them out, I think it is important to remember the frame of “AI in 2024 is comparable to the internet in 1999”. Also, it’s important to remember that no single example of AI will explain the entire space.

First, the Good:

Nobel Prize: Google DeepMind’s Demis Hassabis and John Jumper won the Nobel Prize in chemistry “for their work developing AlphaFold, a groundbreaking AI system that predicts the 3D structure of proteins from their amino acid sequences.” Over 2 million scientists and researchers are using this powerful AI to “make new discoveries”.

NotebookLM: Another Google banger is a feature called Audio Overview within its NotebookLM tool. Basically, a user can feed up to 50 files or links and with the click of a button, Google will spit out ~10 minutes of a podcast between a male and female host. It’s pretty wild. The hosts talk naturally and are able to throw in a bit of humor. They weave interesting facts and insights about the documents uploaded. It’s not 100% correct but the output is extraordinary all things considered. Tesla’s former head of AI Andrej Karpathy called this new UX/UI a “ChatGPT moment” for AI audio and believes it previews a new format for learning and education.

The Bad:

Hurricane Helene: AI-generated photos of hurricane victims have been flying around social media. Even when users realize the photos are fake — and some are truly absurd — the outrage they create doesn’t go away.

Pig Butchering Scams: There are compounds in Southeast Asia — typically operated by Chinese gangs — that use slave labour to run online scams known as “Pig Butchering”. Regular people are tricked into these jobs and then spend 10 hours a day texting and messaging potential victims around the world by befriending or seducing them online and then finding a way to steal funds (either finagling bank details or asking for money to be sent). The ease of AI-generated images, video and text is leading to an increase in volume of these scams.

The weird AF (just why, why?):

Austin’s #1 restaurant on Instagram is fake: Someone created an Instagram page for a restaurant in Austin called “Ethos”. It has over 70k followers and posts delicious-looking dishes. And by “delicious”, I mean “completely fake and if you used your brain for 8 seconds you’d realize they AI-generated images of food” that get a ton of online engagement. There is no actual “Ethos” restaurant. The creator of this account is funnelling users to a merch shop and — to be fair — a lot of users are probably in on the joke. Although, it would probably be smart to capitalize on this hype and just go ahead and open a restaurant.

The absolutely massive opportunities:

Tesla vs. Waymo: I plan to write more on the self-driving car industry. In the meantime, it is worth flagging here because Tesla just had a “We, Robot” event where it previewed an autonomous Robotaxi, Robovan and humanoid robot called Optimus. These are years from commercial availability. However, I’ve used Tesla’s Full Self-Driving (Supervised) for 6 months and am fully convinced that autonomous vehicles will be everywhere within a decade. This also makes me believe autonomous robots are likely to be everywhere. The current North American leader in autonomous driving is Google’s Waymo. Ben Thompson has a great breakdown on the different approaches between Tesla and Waymo for an autonomous car future. Either way, the demand for AI data centres will be massive in an autonomous vehicle world.

$100B+ AI Consumer Companies: Rex Woodbury has an overview on AI’s opportunity in the consumer market. OpenAI recently raised at a $157B valuation and is projecting $11B in revenue for 2025. This is a massive ramp up from $2B in 2023 and $4B in 2024, with the caveat that OpenAI is bleeding money and it’s not clear if its current business model can be profitable. One way to look at other opportunities is to see how AI can improve the experience for existing consumer services: dating (AI matchmaking can take on Tinder), travel (AI travel agents could take on Booking.com) or music (an AI songwriter can take on Spotify). Then, there are massive TAMs that are clearly ripe for AI-powered alternatives, including online shopping ($16T) and games ($250B).

Apple, Meta and Smartglasses: A few weeks ago, Zuck Daddy Flex showed off Meta’s Orion glasses, which are still years away but previewed the extremely large TAM of a post-smartphone world. Interestingly, Zuck said that it was advancements in multi-modal generative AI that make the eyeglass hardware way more useful. In layperson’s terms: you can use image, voice, text, or video as inputs and get useful outputs in any of those mediums (eg. you point the glasses to “see” something and it can use voice to “tell” you what it is and give options on what to do). The Apple Vision Pro has been a bust but there are reports that the iPhone maker is targeting lower-priced eyewear — which will be powered by Apple Intelligence — that would compete with Meta’s range of eyewear products.
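To put the OpenAI ramp-up from the list above in numbers, here is a quick Python sketch of year-over-year growth, using the approximate figures cited ($2B in 2023, $4B in 2024 and a projected $11B in 2025). The helper function is my own:

```python
# Approximate OpenAI revenue as cited above, in billions of USD (2025 is a projection).
revenue = {2023: 2.0, 2024: 4.0, 2025: 11.0}

def yoy_growth(series):
    """Year-over-year growth rate for each consecutive pair of years."""
    years = sorted(series)
    return {y: series[y] / series[prev] - 1 for prev, y in zip(years, years[1:])}

for year, growth in yoy_growth(revenue).items():
    print(f"{year}: {growth:.0%} growth")
# 2024: 100% growth
# 2025: 175% growth
```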

Now, we can’t talk about artificial intelligence without addressing the elephant in the room: “artificial general intelligence” (AGI), or a form of machine intelligence that is superior to most forms of human intelligence across most types of tasks.

To get a better picture of how AGI might look, Dario Amodei — the CEO of Anthropic (an OpenAI competitor valued at $40B) — recently dropped a ~15,000-word blog post titled “Machines of Loving Grace: How AI Could Transform the World for the Better”.

Dario’s approach to AI has largely been focussed on the safety side — which is a major concern — but this blog is his most optimistic vision for the industry.

He prefers the term “powerful AI” to AGI and goes on to explain:

By powerful AI, I have in mind an AI model—likely similar to today’s LLM’s in form, though it might be based on a different architecture, might involve several interacting models, and might be trained differently—with the following properties:

In terms of pure intelligence, it is smarter than a Nobel Prize winner across most relevant fields – biology, programming, math, engineering, writing, etc. This means it can prove unsolved mathematical theorems, write extremely good novels, write difficult codebases from scratch, etc.

In addition to just being a “smart thing you talk to”, it has all the “interfaces” available to a human working virtually, including text, audio, video, mouse and keyboard control, and internet access. It can engage in any actions, communications, or remote operations enabled by this interface, including taking actions on the internet, taking or giving directions to humans, ordering materials, directing experiments, watching videos, making videos, and so on. It does all of these tasks with, again, a skill exceeding that of the most capable humans in the world.

It does not just passively answer questions; instead, it can be given tasks that take hours, days, or weeks to complete, and then goes off and does those tasks autonomously, in the way a smart employee would, asking for clarification as necessary.

It does not have a physical embodiment (other than living on a computer screen), but it can control existing physical tools, robots, or laboratory equipment through a computer; in theory it could even design robots or equipment for itself to use.

The resources used to train the model can be repurposed to run millions of instances of it (this matches projected cluster sizes by ~2027), and the model can absorb information and generate actions at roughly 10x-100x human speed. It may however be limited by the response time of the physical world or of software it interacts with.

Each of these million copies can act independently on unrelated tasks, or if needed can all work together in the same way humans would collaborate, perhaps with different subpopulations fine-tuned to be especially good at particular tasks.
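A back-of-envelope reading of those properties (my framing, not Dario's math): take “millions of instances” at its low end of one million, multiply by the 10x-100x speed-up, and you get a sense of the workforce he is gesturing at. This sketch ignores the physical-world and software bottlenecks he flags:

```python
# Assumption: 1 million instances (the low end of "millions"), each running
# 10x-100x faster than a human. Ignores physical-world and software limits.
instances = 1_000_000
speedups = (10, 100)

low, high = instances * speedups[0], instances * speedups[1]
print(f"~{low:,} to ~{high:,} human-speed worker equivalents")
# ~10,000,000 to ~100,000,000 human-speed worker equivalents
```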

Jensen called the AI data centre the new “computing unit”. Dario says the arrival of “powerful AI” will basically be a “country of geniuses in a data centre”.

When does he think it’ll happen? As early as 2026 (OpenAI CEO Sam Altman wrote in a blog post last month that superintelligence could be “a few thousand days” away).

Even if these timelines are delayed, the AI leaders are expecting some form of Nobel-level machine intelligence within the decade. Assuming powerful AI is fully aligned with humanity’s interests, we are all about to be CEOs of some incredibly smart AI agents.

For Dario, one of the major outcomes from human access to powerful AI is that we will quickly make significant breakthroughs in biology, medicine and neuroscience.

“Powerful AI” will help us compress 100 years of scientific discoveries into 5-10 years (and you only need a few discoveries to make a huge difference). To wit, this handful of scientific breakthroughs from recent decades will reshape our future: CRISPR, CAR-T cancer therapies, mRNA technology and a massive reduction in the cost of genome sequencing.

In a best-case scenario, Dario — who has a background in biology — is hopeful that AI-powered scientific research can eliminate most cancers, create treatments for all infectious diseases, prevent Alzheimer’s and improve mental health within the decade after “powerful AI” arrives.

A single one of these outcomes would greatly improve the human condition, reduce healthcare costs and boost the economy through increased productivity.

After writing about the positive scenarios, Dario follows his belief in powerful AI to its logical conclusion and acknowledges that the entire economic system will have to be remade:

While that might sound crazy, the fact is that civilization has successfully navigated major economic shifts in the past: from hunter-gathering to farming, farming to feudalism, and feudalism to industrialism. I suspect that some new and stranger thing will be needed, and that it’s something no one today has done a good job of envisioning.

It could be as simple as a large universal basic income for everyone, although I suspect that will only be a small part of a solution. It could be a capitalist economy of AI systems, which then give out resources (huge amounts of them, since the overall economic pie will be gigantic) to humans based on some secondary economy of what the AI systems think makes sense to reward in humans (based on some judgment ultimately derived from human values). […]

Or perhaps humans will continue to be economically valuable after all, in some way not anticipated by the usual economic models. All of these solutions have tons of possible problems, and it’s not possible to know whether they will make sense without lots of iteration and experimentation.

Whether or not the tech industry answers the “AI’s $600B question” or Nvidia stays dominant, the die has been cast and there are many more important challenges to address.

When the Telecom Bubble burst, we were left with $1 trillion of telecom equipment that set the stage for the next wave of internet, mobile and cloud computing.

After this rush of AI infrastructure spend and research (not to mention the revival of nuclear power for abundant clean energy), we will be left with the ability to manufacture intelligence at scale. Even if it feels a bit bubbly and overhyped right now, the ground truth is that the current wave of AI will change every aspect of society…except making me a better stock picker (doesn’t matter how powerful the AI is, this will never happen).

Links and Memes

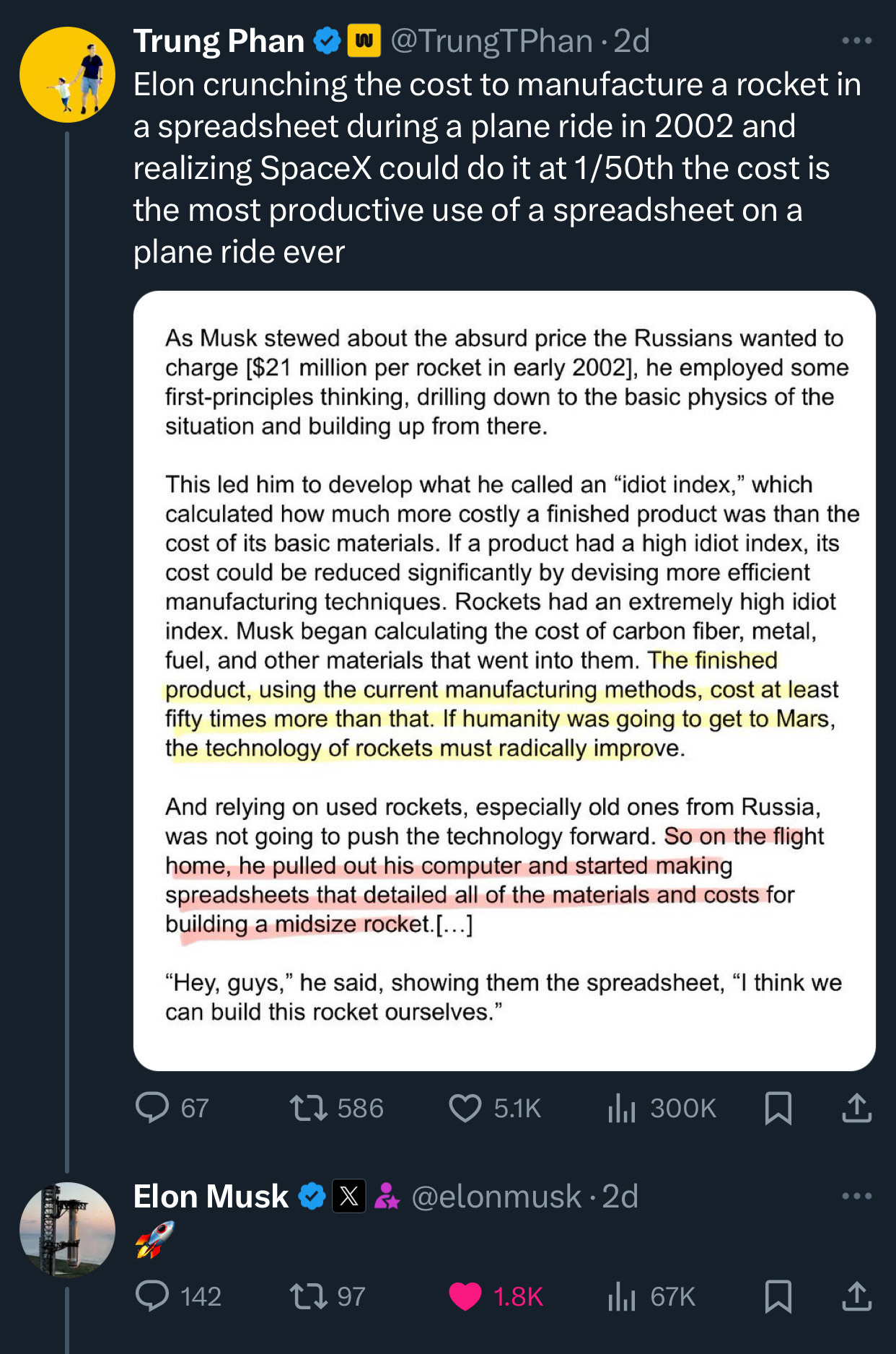

SpaceX’s Starship Miracle: Imagine a 398-foot steel skyscraper — weighing 11,000,000 lbs — launched into space. Then, 7 minutes later, a segment of the skyscraper falls back to earth and is caught by mechanical arms on a 498-foot tower.

Well, that’s basically what happened with the 5th launch of SpaceX’s Starship vehicle, which is the largest and most powerful rocket ever built. The rocket was sent into space, and its Super Heavy booster returned to earth and was caught by the “Mechazilla” launch tower with two mechanical arms called “chopsticks”.

An incredible achievement by Elon, COO Gwynne Shotwell and the SpaceX team. And probably the most insane thing I’ve seen in 2024 (for reference: I’m on my 5th attempt at Duolingo Spanish and can still only say “Donde estan tacos?”).

Why is this important? A key metric for the space economy is the cost to send a payload into space. Here is some rough napkin math. The Saturn V, which launched the Apollo 11 moon mission in 1969, cost $10,000-$15,000 per pound. SpaceX’s current reusable workhorse rocket, the Falcon 9, costs about $1,200 per pound. Starship could get that number down to ~$100 per pound.
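Here is that napkin math as a short Python sketch, applied to a hypothetical 10,000-pound payload. It collapses the Saturn V range to its midpoint and treats Starship's ~$100 per pound as the projection it is:

```python
# Approximate launch cost per pound as cited above (USD).
cost_per_lb = {
    "Saturn V (1969)": 12_500,     # midpoint of the $10,000-$15,000 range
    "Falcon 9 (reusable)": 1_200,
    "Starship (projected)": 100,   # a projection, not a realized price
}

payload_lbs = 10_000  # hypothetical payload

for rocket, cost in cost_per_lb.items():
    print(f"{rocket}: ${cost * payload_lbs:,} to launch {payload_lbs:,} lbs")

falcon_to_starship = cost_per_lb["Falcon 9 (reusable)"] / cost_per_lb["Starship (projected)"]
print(f"Falcon 9 to Starship: ~{falcon_to_starship:.0f}x cheaper per pound")
# Saturn V (1969): $125,000,000 to launch 10,000 lbs
# Falcon 9 (reusable): $12,000,000 to launch 10,000 lbs
# Starship (projected): $1,000,000 to launch 10,000 lbs
# Falcon 9 to Starship: ~12x cheaper per pound
```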

A cheaper cost of transportation completely transforms what can be done in space. Let’s just say that a horse buggy and sailboat wouldn’t be able to deliver me my Amazon same-day deodorant (but modern air travel, highway trucking and last-mile delivery can).

The most visible use of SpaceX’s current re-usable Falcon 9 is the Starlink low-earth orbit satellite constellation for internet (and also ferrying astronauts and cargo to the International Space Station). At ~$100 per pound, we can legitimately start looking at space tourism, mining, manufacturing, solar energy and making Mars a back-up home for humanity.

MORE: A 22-minute YouTube breakdown of the Starship catch, a must-read on how Starship will change the global economy, and one viral perspective on why Elon is able to motivate the smartest rocket engineers in the world.

Taco Bell is a great franchise business: The Invest Like The Best podcast had a fascinating interview with Matt Perelman and Alex Sloane. The two investors dropped out of Harvard Business School to found a franchise investing business, including one of the largest Burger King operations in the world (man, I love Junior Whoppers; not full Whoppers but Junior Whoppers).

When asked about opportunities they missed, Perelman said that the duo passed on Taco Bell a bunch of times because it seemed “too expensive.” They regretted it because Taco Bell is a huge outlier in the F&B franchising space:

Taco Bell is the darling of the franchisee investment universe. It only comps positively, meaning same-store sales always seem to grow. It has higher-level margins than the competitors. Typically, new units are created at really attractive cash-on-cash returns. Their model always seems to work. [...]

Two things [as to why Taco Bell is such an outlier]. They have better marketing than most. Think about the various Taco Bell iterations of commercials and marketing that you can think of, all the way from the Chihuahua up to Pete Davidson recently.

And they have a really compelling operating model. Their food is reheated, so whatever they don't sell that day, they're able to reheat and sell the following day. Which we can all debate how delicious that is or not, but it does drive — by the way, it tastes amazing — it seems to work. And marketing drives a higher top line. Their food cost model drives higher store-level EBITDA margins that allow for more money to be reinvested in the boxes so they look better. And the top line drives more advertising dollars, which forces more people to come in.

Human beings, at least in America, are very Pavlovian. More Taco Bell commercials on TV this month than the same month the prior year—there's probably gonna be more people who show up to Taco Bell. And with comps that have gone up every single year for twelve years, that's really driving that flywheel.

I never thought about the “re-heat” food angle and am actually not that mad about it (I also don’t eat at Taco Bell a lot, so maybe I would be more mad if I did). GREAT operating model, though.

***

…and them baller links:

“How Bogg Bags, the Crocs of Totes, Won Over America’s Moms”: I totally missed the rise of this product, which saw sales climb from $4m in 2019 to $100m in 2024. I also missed the rise of the Stanley Cup tumbler, which probably explains why no consumer VC fund will return my cold DMs.

Anyway, Bogg Bag was founded in 2009 by Kim Vaccarella, who was working as a controller at a commercial real estate lender. When she couldn’t find a “roomy, lightweight, easily cleaned tote bag”, she decided to make one herself (basically using her family’s entire $30k in savings for the first big inventory shipment in 2012). Incredible side hustle turned main thang. (Bloomberg)

**Bearly AI App Update**: The productivity app I'm building doesn't use Croc materials, but does have an easy-to-use interface to save you hours on reading, writing and research work. Use code BEARLY1 at this website for a FREE month of the most powerful text, image and voice AI models from Google, Meta, OpenAI and Anthropic.

“The Guru Who Says He Can Get Your 11-Year-Old Into Harvard”: Wild story of a guy who runs an Ivy League admissions company that is valued at $554 million. In high school, New Zealand founder Jamie Beaton had straight As while taking more than 2x the required course load. He also launched two businesses and won a national debate competition. Beaton got into 25 top universities and basically turned his college admissions strategy into a business.

His company Crimson Education now serves 8,000 clients paying up to $200k a year (with kids starting at age 11). Beaton also has master’s degrees or PhDs from Stanford, Oxford and Harvard. Not a huge fan of these operations — since they completely warp the playing field for college applicants — but I’m more inclined to blame the Ivies for not opening up more seats and creating this insane status game that parents are willing to pay through the nose for. Don’t hate the player…(Wall Street Journal)

Speaking of status...here’s a good theory on it: Economist Tyler Cowen says a mark of talent is knowing which status hierarchies to climb. What does this mean? Aaron Renn has an insightful write-up on X using his own life as an example. Growing up in the Midwest, he played a Midwestern status hierarchy game. This meant going to a great school in the region, Indiana University (but not applying to Harvard), then getting a solid corporate job at Accenture (but not applying to McKinsey), then moving to Chicago (instead of New York).

He’s not shitting on his own career but just pointing out that he didn’t even realize the other status hierarchies existed because no one told him. He ends with this general lesson: There's an old saying: “Without awareness, there is no choice.” You are going to be working to climb some status hierarchies. The question is whether you actually made a conscious choice or just drifted into it by default. (X post)

…and here are some posts: