The Camera, Vincent Van Gogh and "The Starry Night"

How the invention of the camera changed art and led to Van Gogh's classic painting.

Thanks for subscribing to SatPost.

I was in Las Vegas for my kid’s spring break when this wild Studio Ghibli ChatGPT thing popped off. I was so busy getting my face ripped off by $12 bottles of water that I didn’t get a chance to write about it until now.

So, today’s e-mail will be a catch-up on my thoughts, including an update on a previous related essay (The Camera, Vincent Van Gogh and "The Starry Night") and 36 bullet points on the Studio Ghibli ChatGPT viral moment (plus the best posts).

Also this week:

Tariff and Trade War Stuff

Chipotle, Adolescence, EssilorLuxottica, RIP Val Kilmer

…and them wild posts (including a really good April Fool's prank)

PS. I also typed up 3,000+ words on the creative process for Studio Ghibli co-founder Hayao Miyazaki but will send that next week.

This issue is brought to you by Liona AI

Launch a GPT Wrapper in Minutes

As many of you SatPost readers may know, I’ve been building a research app for the past few years (Bearly AI).

Over that span, I’ve drunk over 1,347 coffees while my co-founder built a flexible backend to manage all of the major AI APIs. We turned that backend into a product called Liona AI.

This easy-to-use platform lets your users and teams connect directly to OpenAI, Anthropic, Grok, Gemini, Llama, Cursor and more while you maintain complete control over security, billing, and usage limits.

My two favourite paintings are Vincent Van Gogh’s “The Starry Night” and a face portrait of me that a Vietnamese street artist drew that made me look like I had 6% body fat.

The former painting is a great lesson on how technology changes art and creative work (while the latter is the best 125,000 Vietnamese Dong I’ve ever spent).

Van Gogh created his masterpiece in 1889. But the actual painting style for “The Starry Night” was born from the art world's response to the invention of the camera decades earlier.

To trace a connection between the camera and “The Starry Night”, we start with Van Gogh’s early years: the Dutch painter was born in 1853 in Zundert, Netherlands and had a fascination with art from a young age. His interest included a short stint as an art dealer. But through his teens and 20s, poor mental health hampered Van Gogh’s ability to focus and kept him from holding a job. In 1881 — at the age of 28 — he moved back in with his parents (I briefly did the same in my late-20s but have yet to paint an art masterpiece).

With financial and emotional support from his younger brother Theo, Van Gogh began to paint more seriously. The two brothers frequently exchanged letters, providing future generations with an insight into Van Gogh's psyche.

Van Gogh's early works were character and setting studies. Compared to his more famous art, there was a lack of color in these pieces. But this drab style was very much in line with the post-Enlightenment norm of painting scenes as a form of documentation (aka look as real as possible).

However, painting as documentation would become a lot less important after the arrival of the camera.

For that story, let's rewind back to 1839 and the invention of the Daguerre camera. It was the first camera developed for commercial use and named after French artist Louis Daguerre (who invented a new type of photochemistry). While the Daguerre camera required 30 minutes of light exposure to produce a photograph, that was a much shorter amount of time than the hours required of photography devices from the early decades of the 1800s.

Below is the Daguerre camera and what is believed to be the first Daguerreotype photograph of a human face, taken in 1839 (the subject is Dorothy Draper and the photographer is her brother John).

Camera technology advanced quickly and the ability to capture real-life images no longer required an artist. Up to this point, the norm for having “a portrait done” was to commission a painter and say “yo, can you paint my face and make it look like I have single-digit body fat %?”. Portrait painting of everyday people was not a particularly glamorous business. It was a workmanlike trade and comparable to a profession like carpentry. The highest esteem for artists was to paint historical settings and figures.

As with so many inventions during the Industrial Revolution (steam engine, railway, electricity, telegraph, spinning jenny and the word “dude”), the camera led to rapid social and economic change.

How did the creative community receive the camera news? French poet Charles Baudelaire expressed the mood by saying “[photography is] art’s most mortal enemy…that upstart art form, the natural and pitifully literal medium of expression for a self-congratulatory, materialist bourgeois class.”

Meanwhile, French painter Paul Delaroche came off the top rope when he first saw the Daguerre camera: “from today, painting is dead.”

By 1849, more than 100,000 Parisians — about 10% of the population — had a portrait taken by camera per the Kiama Art Blog. This meant that there was a ready substitute for one of the most utilitarian art jobs. On the one hand, artists that didn’t take up photography lost a source of income. On the other hand, many artists were freed to try new painting techniques.

But it wasn’t until the 1870s that the art world truly changed in response to the invention of the camera. An art professor on the subreddit r/AskHistorians provides a detailed breakdown, specifically focusing on the rise of Impressionism:

[By the 1870s and 1880s] they are a generation of artists who have lived with photography essentially their whole lives. Furthermore, photography was one of many new 19th century technologies that prompted advances in the science of optics, and the Impressionists were by and large immersed in this new world.

Rather than try to compete with photography for claims to objective truth, they were in essence freed by it. Aware of advances in the understanding of color, for example, they knew that by placing areas of pure color next to one another, they could produce a blended effect in the mind, which is how they developed their distinctive facture (facture is the art historical term for an artist’s unique handling of the paint).

In essence, they doubled down on the subjective nature of art, the way in which it represents not some universal perception but rather an individual one. They translated their own experience of the world at any given date and time into paint. And in the process they changed art forever. By decoupling painting and objective vision once and for all, they inaugurated what we art historians know as high modernism, of all those avant-garde isms you may be familiar with, from Impressionism, to Fauvism, to Cubism and on to the complete abstraction of the middle of the twentieth century.

It is not too much of an exaggeration to say that photography indirectly helped develop modern and postmodern art as we know it.

Big Boss Impressionist artists such as Claude Monet tried techniques that were different from what a camera could capture (playing with light, color and brushstrokes). Monet’s most famous works were reality filtered through his subjective lens.

Back to Van Gogh: he followed his brother Theo to Paris in 1886 (then Arles, France in 1888). In France, Van Gogh was exposed to Monet and other Impressionists. The budding artist transitioned from his earlier dark Dutch painting style to a brighter palette and created art that translated the world through his personal lens.

Unfortunately, he suffered another mental breakdown and mutilated himself in the infamous “ear-cutting” incident. He spent months in a hospital before checking into a psychiatric institution in Saint-Rémy, France in May 1889.

A year earlier in 1888, an American named George Eastman released the first commercially successful roll film hand camera. Eastman's company had one of the greatest corporate names ever if you are a fan of strong-sound letters at the beginning and end of a word: Kodak (one fan: Spanx founder Sara Blakely named her company as such because of the strong C/K sounds found in “Kodak” and “Coca-Cola”).

At this point in Van Gogh’s timeline, the artist had lived through the early days of the camera up to its full-on adoption by amateurs (the original Kodak camera motto was not “we really hope the iPhone doesn’t take us out of business in 120 years” but “You press the button, we do the rest”).

Painters had gone from competing with cameras to being intrigued by the outputs and experimenting with entirely new styles. Van Gogh initially derided photographs as “mechanical” before saying “ah, what portraits we could make from life with photography and painting.”

While at the Saint-Rémy asylum, Van Gogh (now considered a Post-Impressionist, one of the painters who combined the bright colors of Impressionism with more subjective feeling) entered his most creative era. As part of the healing process, the institution took a relatively progressive approach and believed it was crucial to surround patients with nature. Van Gogh spent the next year on a strict schedule and made 150 paintings, including many landscapes inspired by the view from his asylum bedroom.

One such painting: “The Starry Night”, which Van Gogh created on the evening of June 23rd, 1889.

As detailed by an insightful video from Great Art Explained, Van Gogh made the painting in a studio room (not the room with his bedroom view). It was actually done during the daytime, which meant he painted it from memory (aka very subjective).

To be sure, Van Gogh drew from many inspirations outside of Impressionism. He was a fan of Japanese artist Katsushika Hokusai, who painted “The Great Wave”. And side effects from an epilepsy drug (digitalis) may have led to a yellow tinge in Van Gogh’s vision.

Either way, it was not the first time Van Gogh painted a starry night sky. He previously did two other ones that you have probably seen:

“Cafe Terrace at Night” (September 1888)

“Starry Night Over The Rhone” (September 1888)

Notice that the night skies in these paintings are much more “realistic” than the one Van Gogh painted while at Saint-Rémy. Van Gogh felt strongly that the realism was important. In fact, Van Gogh wrote to French painter Emile Bernard that “The Starry Night” was a failure because it was too abstract and unrealistic.

Within 14 months of completing “The Starry Night”, Van Gogh was dead from a self-inflicted gunshot wound. He was 37 years old and had only sold one painting during his life.

Today, “The Starry Night” is displayed at MoMA in New York. It is the 3rd most visited painting in the world (after the Mona Lisa and the Sistine Chapel). Turns out that Van Gogh's most subjective painting became his most enduring work.

Famed art historian Meyer Schapiro remarked that Van Gogh created the painting under the “pressure of feeling.” My favourite take comes from astrophysicist Neil deGrasse Tyson, who has a beautiful explanation as to why Van Gogh’s masterpiece has so resonated:

“You know what I like about The Starry Night?

It’s not what Van Gogh saw that night, it’s what he felt.

[The painting] is not a representation of reality, and anything that deviates from [it] is reality that has filtered through your senses. And I think art at its highest is exactly that.

If this was an exact depiction of reality, it would be a photograph and I don’t need an artist.”

Since the release of ChatGPT in November 2022, I’ve come back to Tyson’s line every time there is a new AI breakthrough in writing, images, audio or video: “It’s not what Van Gogh saw that night, it’s what he felt.”

The invention of the camera changed how artists painted. Likewise, these AI tools will change all manner of human creative outputs…for better and for worse. It’s inevitable, though.

A recent example was when ChatGPT released its powerful new image-generating model. Users could upload any photo and quickly have it changed into different styles. One style that really took off was the anime aesthetic made popular by Studio Ghibli, the legendary Japanese animation firm co-founded by Hayao Miyazaki. Every imaginable image, meme and historical moment was “Ghibli-fied”, including this one of Van Gogh painting “The Starry Night” that I just generated (sorry).

I share more thoughts on the Studio Ghibli viral moment below. For the purpose of this essay, I still believe that this wave of AI will ultimately augment our creativity in writing, images and video rather than replace it.

That’s why the story of “The Starry Night” is so relevant. It’s a reminder of what kind of creative work, with or without the assistance of technology, is worth making and worth caring about.

In the face of ever-improving AI models, the only thing I know for sure is that you will always be able to cultivate one competitive edge: yourself. You need to express yourself more. Your stories more. Your struggles more. Your life more. Your quirks more. Your humor more. Your filter more.

AI is a tool. Don't just take the output. Add yourself into the process. Make decisions. Create something new. Just be sure to translate your own experience into the work. Because if you are only expressing what can be “seen”, technology has it covered. But what can be “felt”? That is still uniquely ours.

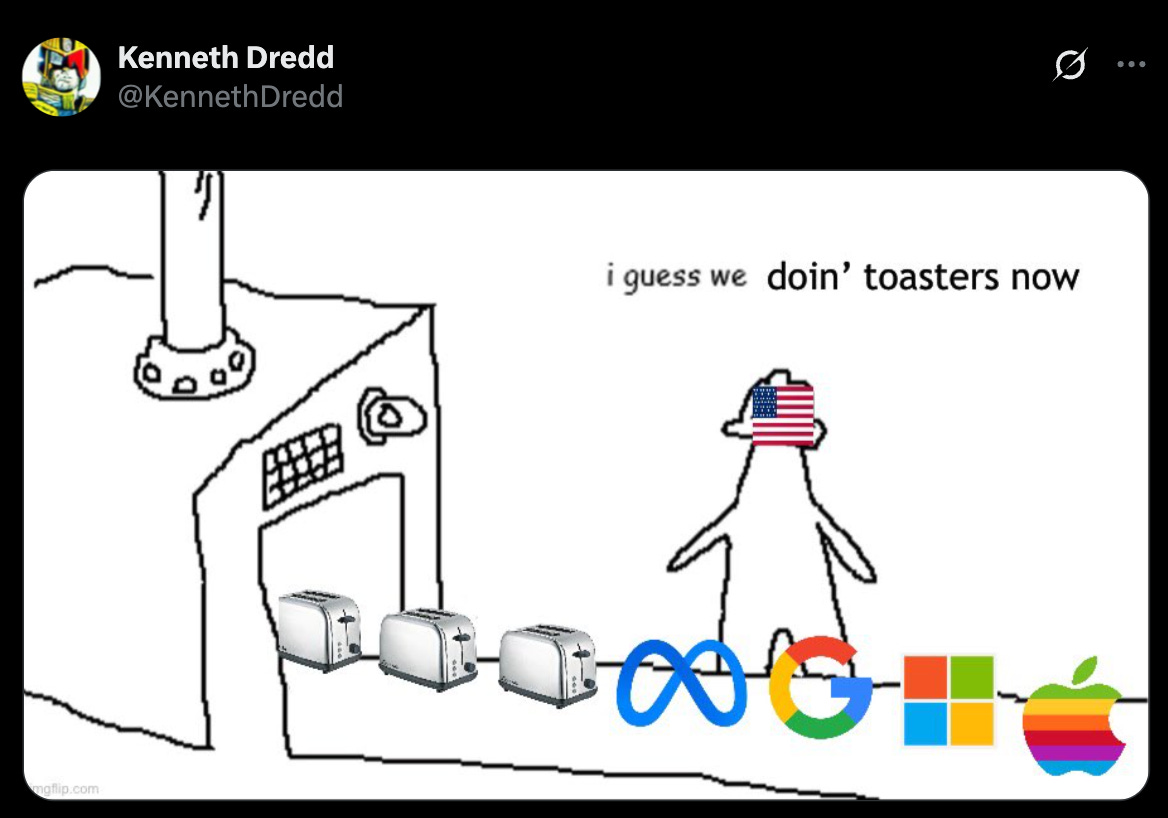

36 Other Bullet Points on the Studio Ghibli ChatGPT Thing That Popped Off While I Was in Las Vegas Getting My Face Ripped Off by $12 Waters

The Studio Ghibli viral moment popped off with this post from Grant Stanton, who ran a photo of himself and his wife through ChatGPT’s new image model. He kicked off OpenAI’s most bonkers viral cycle other than the first week of ChatGPT and the weekend Sam Altman got fired. 51 million views!!! TREMENDOUS ALPHA.

The new ChatGPT image model is a legit breakthrough. Previous models required users to write an elaborate text prompt that off-loaded the task to a separate image generation tool. The result was often nonsensical words, weird body parts and always something “off” about the art. Wharton Professor Ethan Mollick explains how the tech works: “[In] multimodal image generation, images are created in the same way that LLMs create text, a token at a time. Instead of adding individual words to make a sentence, the AI creates the image in individual pieces, one after another, that are assembled into a whole picture. This lets the AI create much more impressive, exacting images.”
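To make Mollick's "one piece at a time" description a bit more concrete, here is a toy Python sketch of an autoregressive generation loop. It is not OpenAI's implementation (those details aren't public); `toy_next_token` is a hypothetical stand-in for a trained model, and the 16-value "palette" and grid size are arbitrary assumptions.

```python
from typing import Callable, List

Token = int  # one "piece" of the image (a real model uses learned image tokens)


def generate_image_tokens(
    prompt: str,
    height: int,
    width: int,
    next_token: Callable[[str, List[Token]], Token],
) -> List[List[Token]]:
    """Toy autoregressive loop: build an image one token at a time, row by row."""
    sequence: List[Token] = []
    for _ in range(height * width):
        # Each new piece is chosen with the prompt AND every earlier piece in view,
        # the same way an LLM picks the next word given the sentence so far.
        sequence.append(next_token(prompt, sequence))
    # Assemble the flat sequence of pieces back into a grid (the "whole picture").
    return [sequence[r * width:(r + 1) * width] for r in range(height)]


def toy_next_token(prompt: str, so_far: List[Token]) -> Token:
    # Hypothetical stand-in for a trained model: a deterministic function of the
    # prompt length, the previous token and the position, from a 16-value palette.
    prev = so_far[-1] if so_far else 0
    return (prev * 31 + len(prompt) + len(so_far)) % 16


grid = generate_image_tokens("make this Studio Ghibli style", 2, 3, toy_next_token)
print(grid)
```

A real model would pick each token from learned probabilities and then decode the token grid into pixels; the sketch only shows the loop structure, where every new piece is conditioned on everything generated before it.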

The improvements take away a lot of the friction. Instead of “huh, what 50 descriptive words do I type in this box?”…you can take any image and just ask “please make this Studio Ghibli style” and that's it. When Google took off in the early-2000s, a large part of the appeal was the simplicity. Just type in the search box. The competitors were way more cluttered and harder to parse for normies.

Between the tech and the ease of use, people were able to “Ghibli-fy” images of every possible idea over the next 96 hours (my personal faves, which I’ll share at the bottom of this section, were the meta memes using Ghibli images to meme the absurdity of the moment).

There were two very strong reactions to the Ghibli images: 1) people excitedly getting in on the fun (OpenAI CEO Sam Altman said the GPUs were “melting” and later added “the chatgpt launch 26 months ago was one of the craziest viral moments i'd ever seen, and we added one million users in five days. we added one million users in the last hour.”); and 2) a lot of very, very angry messages about how OpenAI was releasing slop on the world, stealing art and putting artists out of work.

Re: the people getting in on the fun, it seems like OpenAI picked up a lot of “normie” users including probably your aunt and uncle. A common theme in tech history is a fun use case as a gateway to user adoption. Many people linked to a related 2010 article from VC Chris Dixon titled “The next big thing will start out looking like a toy”.

Re: the angry takes, there’s a bit to unpack. A bunch of legal experts chimed in and said that you can’t actually copyright a “style” such as anime. However, OpenAI (and most of the other large AI labs) have been caught training their text/video/image models on copyrighted material. Altman said “we put a lot of thought into the initial examples” for the image generator and changed his profile pic to a Ghibli image. Clearly, if they took thousands of Ghibli frames, the studio should be compensated. This is all part of the “real-time figure out how the economics of AI works” process we’ve dealt with in the past 3 years.

It makes total sense why artists are aggrieved. A lot of related creative work (graphic design, animation, drawing etc.) was already facing headwinds from new tools and cheap labor prior to the ChatGPT Moment. A lot of these artists are freelancers without full job security. AI just adds to the pain. It's not just visual artists, though: writers and knowledge workers are feeling the crunch too.

The skills I’ve spent my professional life building are very much in the bullseye. I tested Deep Research last month and was like “wow, this is as good as 76% of the things I did in the first 10 years of my career of knowledge work.”

Trust me, I’m taking the lessons of “The Starry Night” very seriously. Any longtime SatPost reader will know how much I like shoehorning my dad jokes into otherwise informative text. That’s me trying to differentiate my work from what AI can crank out in seconds.

Also important: distribution and relentlessly shilling your work because no one else will do it for you.

Back to the Studio Ghibli brouhaha. It’s not like the millions of people who made Ghibli images were going to have that artwork commissioned. Probably a single-digit number of people woke up on March 25th and said, “I’m going to find someone and pay them $50 to draw an image of me and my wife and my dog at the beach in Ghibli style”.

Studio Ghibli itself has been exposed to millions of people who had otherwise never heard of it.

On the last point, many pointed out that Studio Ghibli co-founder Hayao Miyazaki was once shown an AI art render in 2016 and replied with “I am disgusted. I would never wish to incorporate this technology into my work at all. I strongly feel that this is an insult to life itself.” That was based on a dogshit image, though.

Would Miyazaki change his mind now? Well, he’s 84 years old and has famously avoided technology, opting to draw and paint his films by hand. He is also friends with Pixar’s John Lasseter and loved the intro to Up. He almost certainly wouldn’t use the current AI tools, but if someone made something worthy with them, I don’t think he’d hate it either.

Similarly, Steve Jobs once said of Pixar: "no amount of technology will turn a bad story into a good story".

The best criticism I read of “Ghibli-fication” was by Erik Hoel. He refers to it as the “semantic apocalypse”. Basically, generative AI is getting so good at making decent-sounding text and decent-looking images that people are getting flooded by the content and it is “draining meaning”. Imagine my kid. He hasn’t ever seen a Studio Ghibli film. What if he sees 10,000 “Ghibli-fied” images before he watches Spirited Away or My Neighbor Totoro? Those films, as beautiful as they are, will probably hit different than if he had never seen the images.

I think a relatable example for everyone is the Mona Lisa. The average person will probably have seen that image 10,000x in every type of context before actually visiting The Louvre. The magic and meaning are kind of taken away.

The meme-ification of everything does kind of suck in that light. I’m a huge culprit of trying to turn any piece of “art” or news-y moment into a piece of bite-sized content. Everything is flattened: culture, politics, finance, entertainment etc. into an endless feed with little time for reflection. Spend a day off the internet and you miss the “craziest meme story ever”, which is forgotten 12 hours later. This is unfortunately the internet in 2025. It is hella fun in the moment, though. So much dopamine.

On a related note, I’ve posted 100s of Succession, Breaking Bad and Mad Men memes online. Is this so different than people using ChatGPT to make “Ghibli-fied” images? In both cases, you’re taking a piece of content popularized by someone else and using it as a new form of media (that is out of context and without compensation to the original creator).

Someone will read the last point and say, “yeah dumbass, it’s a cheap way to get online engagement and isn’t art.”

That someone would be correct.

However, I think we need to separate the idea of “art” from these AI outputs.

It seems the majority of these AI outputs probably aren't meant to be "art". To be clear, I don't mean "art" as in "hang it up in MoMA". I mean "art" as someone creating something to express an emotion they feel, elicit an emotion from someone else, teach a lesson or bring joy.

Which raises the question, what makes art? Don't some prompted AI works achieve this goal? I think the key is that the creator has to put thought and effort into the output. One framing I like is from legendary sci-fi author Neal Stephenson. In an article titled “Idea Having is not Art: What AI is and isn't good for in creative disciplines”, he believes art requires microdecisions:

"An artform is a framework for a relationship between the artist and the audience. Artist and audience are engaging in activities that are extremely different (e.g. hitting a piece of marble with a chisel in ancient Athens, vs. staring at the finished sculpture in a museum in New York two thousand years later) but they are linked by the artwork. The audience might experience the artwork live and in-person, as when attending the opera, or hundreds of years after the art was created, as when looking at a Renaissance painting. The artwork might be a fixed and immutable thing, as with a novel, or fluid, as with an improv show. But in all cases there is an artform, a certain agreed-on framework through which the audience experiences the artwork: sitting down in a movie theater for two hours, picking up a book and reading the words on the page, going to a museum to gaze at paintings, listening to music on earbuds.

In the course of having that experience, the audience is exposed to a lot of microdecisions that were made by the artist. An artform such as a book, an opera, a building, or a painting is a schema whereby those microdecisions can be made available to be experienced. In nerd lingo, it’s almost like a compression algorithm for packing microdecisions as densely as possible.

Artforms that succeed—that are practiced by many artists and loved by many audience members over long spans of time—tend to be ones in which the artist has ways of expressing the widest possible range of microdecisions consistent with that agreed-on schema. Density of microdecisions correlates with good art."

AI can help make microdecisions. I use ChatGPT, Claude and Grok all the time as research assistants to find info and bounce ideas off of. But it's always an input to my process. AI is a tool. Don't let it stop you from making microdecisions. Here is a recent viral article from a professor at a regional university. He’s talking about his Gen-Z students and it’s grim. Social media has zapped their attention and they’re outsourcing cognitive functions to ChatGPT. Just like your muscles, your mind needs exercise. Always be making microdecisions.

OpenAI says that 130m users have generated 700m images in the past week. I'm sure the majority wasn't intended to be "art". That's fine. I've used countless photo apps over the years to have fun by filtering images and sending them to small group chats. Not everything has to be "art". There is obviously total bottom-of-the-barrel stuff too. A lot of the output is just straight up slop. Low effort engagement bait with zero redeeming features (like Subway’s footlong cookie).

But if you do care about craft and art, then it’s important to always be making microdecisions. To wit: each Studio Ghibli film has 60,000-70,000 frames and Miyazaki has to greenlight every single one. Example: it took his team 15 months to make this one 4-second clip. Insane.

Speaking of slop, the Ghibli trend was a wrap when the White House X account completely jumped the shark and posted this.

Here’s an example of using Studio Ghibli ChatGPT for something new. PJ Ace spent 9 hours taking 100+ scenes from the trailer for The Lord of the Rings and “Ghibli-fying” them. Somewhat ironically, Miyazaki hated the Peter Jackson trilogy because he thought the battle scenes were too video-gamey and didn’t show the complexity of war. But, clearly, there were many microdecisions made here. It’s not just a 3-second prompt output.

Also, this is pure stream of consciousness (so, sorry for typos).

Media analyst Doug Shapiro has a good series of articles on Hollywood and the future of AI video. He says a useful way to think about AI and industry disruption is a 2x2 matrix whose axes are “technology development” and “consumer acceptance”. One study he points to finds that people who use AI more are more willing to accept AI outputs. Something to think about long term.

Shapiro also has a great quote on what is valuable in a world where AI makes infinite content but there is still finite demand: “The economic model of content creation shifts radically, as video becomes a loss leader to drive value elsewhere—whether data capture, hardware purchases, live events, merchandise, fan creation or who knows what else. The value of curation, distribution chokepoints, brands, recognizable IP, community building, 360-degree monetization, marketing muscle and know-how all go up.”

Aside from art, the new ChatGPT model has interesting use cases, as shared by Professor Ethan Mollick: visual recipes, homepages, textures for video games, illustrated poems, unhinged monologues, photo improvements, and visual adventure games, to name just a few.

Second-to-last post: an interesting take on how ChatGPT totally remakes buying real estate.

Last post is this insightful thread from Balaji Srinivasan:

A few thoughts on the new ChatGPT image release.

(1) This changes filters. Instagram filters required custom code; now all you need are a few keywords like “Studio Ghibli” or Dr. Seuss or South Park.

(2) This changes online ads. Much of the workflow of ad unit generation can now be automated, as per QT below.

(3) This changes memes. The baseline quality of memes should rise, because a critical threshold of reducing prompting effort to get good results has been reached.

(4) This may change books. I’d like to see someone take a public domain book from Project Gutenberg, feed it page by page into Claude, and have it turn it into comic book panels with the new ChatGPT. Old books may become more accessible this way.

(5) This changes slides. We’re now close to the point where you can generate a few reasonable AI images for any slide deck. With the right integration, there should be less bullet-point only presentations.

(6) This changes websites. You can now generate placeholder images in a site-specific style for any <img> tag, as a kind of visual Lorem Ipsum.

(7) This may change movies. We could see shot-for-shot remakes of old movies in new visual styles, with dubbing just for the artistry of it. Though these might be more interesting as clips than as full movies.

(8) This may change social networking. Once this tech is open source and/or cheap enough to widely integrate, every upload image button will have a generate image alongside it.

(9) This should change image search. A generate option will likewise pop up alongside available images.

(10) In general, visual styles have suddenly become extremely easy to copy, even easier than frontend code. Distinction will have to come in other ways.

Below are my favourite Ghibli posts. At one point, you could post literally anything on social media — like a Ghibli-fied banana — and it would go nuclear. I appreciated the Ghibli posts that had a meta angle (like this classic image of an exasperated Miyazaki):

Tariff and Global Trade War Stuff

On Wednesday, President Trump rolled out reciprocal tariffs on 150+ countries around the world. The plan is ostensibly to force other countries to change their trade policies, including lowering tariffs and altering non-tariff barriers (quotas, licensing requirements, technical regulations and currency manipulation).

My friend Rohit pointed out that it looks like AI was used to determine the tariff formula, making it the "first large-scale application of AI technology to geopolitics." When asked how to generate reciprocal tariff rates for all of America's trading partners, the AI models converged on a formula that was "[America’s bilateral trade deficit with a country] divided by [imports from that country]". The US divides that figure by 2 and that is the “reciprocal tariff” (with a minimum tariff of 10%). It’s about as “quick and dirty” an analysis as you could possibly make. Not a ton of consideration for non-tariff barriers.
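The formula is simple enough to write out in a few lines. Below is a minimal Python sketch, assuming the inputs are US goods exports to and imports from a single country in the same units; the function name and the example figures are hypothetical, chosen only to show the arithmetic (they are not real trade data).

```python
def reciprocal_tariff(us_exports: float, us_imports: float, floor: float = 0.10) -> float:
    """Sketch of the "quick and dirty" formula: (deficit / imports) / 2, minimum 10%.

    Assumes us_exports and us_imports are US trade with ONE country, same units.
    """
    if us_imports <= 0:
        raise ValueError("imports from the country must be positive")
    deficit = us_imports - us_exports   # America's bilateral trade deficit
    implied_rate = deficit / us_imports  # deficit divided by imports
    halved = implied_rate / 2            # the US then divides that figure by 2
    return max(floor, halved)            # with a minimum tariff of 10%


# Hypothetical country A: US buys 100, sells 40 -> (60 / 100) / 2 = 0.30
print(f"{reciprocal_tariff(40, 100):.0%}")   # 30%
# Hypothetical country B: US runs a surplus -> formula goes negative, floor applies
print(f"{reciprocal_tariff(120, 100):.0%}")  # 10%
```

The second case shows why the 10% floor matters: a country the US runs a surplus with would otherwise get a negative "tariff". Note that nothing in the formula looks at the partner's actual tariffs or non-tariff barriers; it only uses the two trade flows.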

Supporters of the tariffs note that if the goal is to spark quick negotiations (tariffs go into effect April 9…but that also seems open to change), then putting out a quick “opening offer” is the way to do it. And if there’s one thing we definitely know about Trump: he LOVES deals.

But honestly, who knows what’s going on. I still need to process everything that happened and wipe the crusted tears off my laptop keyboard from when I looked at my portfolio (US markets are down $10 trillion since Trump was elected).

In the meantime, here’s a link round-up:

Tariff Q&A: Welcome to the Actual Inbox: Kyla Scanlon has a good FAQ primer on tariffs.

The White House Whirlwind That Led to Trump’s All-Out Tariff Strategy: The Wall Street Journal has a play-by-play of the lead-up to “Liberation Day” …and the details of the official reciprocal tariffs were very rushed.

Trump’s Tariff Gambit: Debt, Power, and the Art of Strategic Disruption: Tanvi Ratna has a balanced take on the high-wire act the Trump administration is trying to pull off: reduce debt, re-shore manufacturing, reshape geopolitical relationships. If it doesn’t work, lots of inflation, retaliation and lost mid-terms.

Liberation Day and The New World Order: Doug O’Laughlin looks at the history of the American-led global trade order. Recent actions mean it’s over, and he’s pessimistic about what comes next.

Wall Street thinks Trump's tariffs will eat Main Street alive: Nate Silver looks at the performance of individual stocks on the first trading day after tariffs were announced. Then extrapolates what that says about economic expectations and consumer confidence (people buying booze, watching Netflix and pausing vacations).

Treasury Secretary Scott Bessent…went on the Tucker Carlson podcast the day after the tariffs were announced. Worth hearing the rationale straight from the source. Bessent says he’s “not happy” with the market sell-off but repeatedly talks about Main Street in the conversation, noting that the “top 10% of Americans own 88% of the equities”. He believes the totality of government efforts will benefit Main Street in the longer term.

A $2,300 Apple iPhone? Trump tariffs could make that happen: The iPhone maker has been absolutely clapped. Lost $500B market cap in two days and now valued at $2.8T. Since Trump 1, Tim Cook has been diversifying the supply chain out of China due to fears of a trade war. He moved manufacturing to India and Vietnam, but both those countries got hit by tariffs (26% and 46%, respectively). Apple prices may very well be going up.

Trump’s massive 46% Vietnam tariffs pummel Nike, American Eagle and Wayfair…and Restoration Hardware, and Deckers Brands (Hoka) and Lululemon. The Vietnamese government has already begun negotiations. Many more countries are doing the same before the April 9 deadline. CNBC has a look at how Asian supply chains might be affected.

Last thing: a 10% tariff was put on the Heard Island and McDonald Islands, which have a population of zero and are inhabited by penguins...incredible fodder for posting:

Links

Chipotle's Avocado Supply Chain: The Wall Street Journal has a solid piece on how Chipotle has been trying to diversify its avocado imports away from Mexico over the past 7 years. The plan wasn’t meant to avoid tariffs, but it has given the fast casual burrito chain more flexibility now that we’re on the cusp of a trade war (side note: Trump's reciprocal tariffs are much lower for Latin American countries...the administration really wants more of the supply chain back in its hemisphere).

Chipotle buys about 5% of all avocados in the United States, which is worth $150m+ a year. That's equivalent to 132 million pounds across 3,700 stores.

Overall, Mexico accounts for 90%+ of all US avocado imports. Chipotle was so concerned about supplier risk that it started looking at alternatives way back in the mid-2010s.

The diversification efforts looked for countries near the equator that could support "the sun-hungry plants" and involved some serendipity:

Another hurdle [in sourcing]: Hass avocados, America’s preferred variety, differ from those native to many Central and South American countries, which traditionally have a lower oil content and more muted flavor. That meant years of work with new suppliers to raise fatty avocados that met the chain’s expectations.

In 2018, [Carlos Londono, Chipotle's head of supply chain] was looking at Colombia when he was introduced to an up-and-coming avocado producer in Medellín called Cartama. Londono quickly realized he had known the company’s CEO, Ricardo Uribe, from high school—and it turned out Uribe had been working to break into avocado exporting for much of the prior two decades.

Colombia’s native avocados can be three times the size of one in a U.S. grocery store, with brighter green skins that don’t hold up well in transit. Uribe searched other Hass avocado-producing countries for varieties that could grow in Colombian soils.

By the mid-2010s Uribe’s efforts were starting to pay off, and when Chipotle reached out, he had just bought more than 2,200 additional acres to plant avocado trees. Uribe tailored his farming more closely to Chipotle’s specifications, and devised ways to ship his avocados in containers to the U.S. so they could ripen upon arrival.

Chipotle’s business transformed Cartama, helping it to become one of Colombia’s largest avocado growers and exporters.

The $64B company also found suppliers in Peru, the Dominican Republic, Brazil and Guatemala (while buying every California avo it could). Still, nearly half of its fruit comes from Mexico.

However these tariffs shake out, Chipotle expects to eat the cost...which means its automated avocado machine (literally called an "Autocado"), which cuts the time to make a batch of guacamole from 50 minutes to 25 minutes, will be operating at full speed. Also operating at full speed: the measuring scales that make sure you don't get a single extra shred of carnitas in your burrito bowl.

***

RIP Val Kilmer: After battling throat cancer for years, the iconic actor died at the age of 65. During the heyday of Blockbuster Video in the mid-1990s, the two films I probably rented the most were Heat and Tombstone. Kilmer was just unreal in those films. Heat is top 10 for me, while Kilmer's Doc Holliday is one of my fave characters ever. Probably quoted "I'm your Huckleberry" in excess of 6,500x in my life (Bob Dylan was such a huge fan of Tombstone that he once met Kilmer and kept bugging him to say the lines).

Kilmer was also the unforgettable Iceman in Top Gun. Tom Cruise asked him to come back for a scene in the 2022 sequel (Top Gun: Maverick) and got emotional talking about how Kilmer played the role while in diminished health.

True Story: Kilmer's Tombstone co-star Kurt Russell used to live in Vancouver with actress Goldie Hawn in the early 2000s. I went to the movie theatre once with some friends and the star couple was at the concession stand. I walked up to Russell and said "Excuse me, Mr. Russell. Tombstone was tight." He looked at me. Very confused. Kind of nodded, then grabbed Goldie's hand and sped away. Anyway, incredible film and performances.

***

Some more links for your weekend:

EssilorLuxottica is the world's largest eyewear company...the $100B+ French-Italian group owns (Ray-Ban, Oakley, Oliver Peoples) or licenses (Burberry, Armani, Brunello Cucinelli) many leading consumer brands, which are useful for people trying to relive Kanye's rap verse "sunglasses and Advil, last night was mad real". The frames/fashion business is 25% of revenue, while the lens/prescription/vision care side makes up the other 75%. This Business Breakdowns podcast has a solid deep dive on the company.

Adolescence is the top Netflix series...the making of the show is fascinating (each of the four episodes is a single take, which required a ton of planning and logistics as shown in this behind-the-scenes video). Separately, Ian Leslie writes that while Adolescence is "impressive entertainment", he's "not sure about the social commentary".

Why LAX’s $30B Upgrade Isn’t Enough to Fix the Airport’s Traffic...WSJ on how LAX gets 96k cars a day and why the airport's horseshoe layout makes it very hard to improve the flow. Truly one of the worst airport experiences in North America (especially when you also have to pay $17 for an egg salad sandie that doesn't look quite right in one of those triangle plastic thingies).

...and here's a really good April Fool’s Prank.

This is pretty concerning.