DeepSeek: Links and Memes (So Many Memes)

How a Chinese AI lab spun out of a hedge fund shook up the entire tech industry.

Thanks for subscribing to SatPost.

Today, we are talking all things DeepSeek, the Chinese AI firm that shook up the entire tech industry. I bookmarked so many links and wild memes from the past week and decided to just compile them here for future reference (and some laughs).

The entrepreneur and investor Marc Andreessen was recently on the Lex Fridman podcast. During the ~4 hour chat, Andreessen explained how he was approaching the AI race. One way is by framing the industry through “trillion dollar questions” and how people will make or lose a trillion dollars depending on the answer:

Here are a few of the questions:

Big models or little models?

Open models or closed models?

Will synthetic data work?

Will AI hallucinations be fixed?

How will data centres be financed?

How will semiconductor chips advance?

How will OpenAI transition from a non-profit to a for-profit?

Will models be censored? By what country or entity? How will nations around the world pick models based on how they are censored?

The answers to these questions have changed in recent weeks after DeepSeek — a Chinese AI firm spun out of a quantitative hedge fund — released AI models and research papers detailing new training innovations.

DeepSeek employs only ~150 people, yet its AI models are competitive with those from the largest and most valuable tech organizations in America (OpenAI, Anthropic, xAI, Meta’s LLaMa, Google’s Gemini), seemingly at a fraction of the cost. These models were also made open-source for others to copy and tinker with. DeepSeek’s highly efficient releases were juxtaposed against major American players committing to massive AI infrastructure spending numbers: $500B (Project Stargate with OpenAI, Oracle, Softbank), $80B (Microsoft) and $65B (Zuck Daddy) to cite a few.

DeepSeek’s models also punctured the narrative that China was years behind America in AI development. Now, it looks like China may be only a few months behind.

Every major industry participant, investor, and government organization has had to seriously re-evaluate their takes on these “trillion dollar questions”.

The normie — AKA people with literally anything better to do on a Sunday rather than doomscrolling X — learned about these re-evaluations if they owned semiconductor stocks and checked their portfolio on Monday. Over $1 trillion was wiped from US markets. The biggest winner from the current generative AI hype cycle (Nvidia) fell by 17% or $600B, a single-day record for market cap decline in absolute dollars. Other major semis-related names (TSMC, Oracle, Broadcom) also had big drawdowns. It was ugly.

The story was just a perfect storm of major 2025 themes: “China”, “New Cold War”, “Trump”, “CCP”, “AI race”, “Nvidia”, “Stock Bubble?”, “hundreds of billions of dollars of CAPEX spend”, “Big Tech”, “Magnificent 7 Stocks”.

There were so many angles! An incredible number of angles. Do you know what a myriagon is? I just Googled “what shape has the most angles” and the first result was “myriagon”, which apparently has 10,000 sides and 10,000 angles. Way more impressive than a decagon, which only has a pitiful 10 angles. Don’t even get me started on triangles. Pathetic.

Anyway, DeepSeek is the “myriagon” of tech stories. There are so many angles to explore including:

DeepSeek Founding & Culture

What was DeepSeek’s Breakthrough?

How DeepSeek Hit #1 On The App Store

Reactions to the DeepSeek Shock

Nvidia Short Thesis and Big Tech Effects

Geopolitics & What’s Next?

Even if you’ve been following this story closely, I promise the memes will be worth it (disclaimer: memes do not necessarily express the views of this author, but, if it’s included, it definitely made me laugh).

DeepSeek Founding & Culture

The founder of DeepSeek is Liang Wenfeng.

According to Bloomberg, he was born in 1985 in Zhanjiang. This was a fairly poor region in China’s Guangdong province and he grew up in modest circumstances (his father was an elementary school teacher). Liang studied electrical engineering at undergrad and received a master’s in information engineering from the prestigious Zhejiang University.

In 2015, he launched a quantitative hedge fund called High-Flyer with a couple of friends, and they started applying machine learning methods to pick stocks on Chinese exchanges. The fund grew to ~$15B in assets under management (AUM) but lost a bunch of money in 2021 (and a bunch of investors pulled out).

Around the same time, Liang had started dabbling in AI research. He studied “machine vision” during his master’s degree and had developed an interest in creating human-level intelligence with AI.

Fortuitously, Liang had purchased thousands of Nvidia GPU chips for his quantitative hedge fund and members of his team had gotten very good at programming those chips. As Liang scaled back his fund — which still has $5B+ in AUM — he was able to re-direct some of those chips toward an AI spin-off called DeepSeek, which launched in 2023. Described as a hobby (or side hustle), Liang started hiring dozens of PhDs for DeepSeek — only from China (the entire team is local) — to build a leading AI model. Math Olympiad winners were highly sought after.

Within the Chinese tech industry, DeepSeek offered salaries only matched by ByteDance (the owner of TikTok). There was no pressure to monetize the product and the focus was very much on AI research. This prioritization of research over monetization was similar to OpenAI’s early days and to how UK AI lab DeepMind operated prior to its acquisition by Google.

While the DeepSeek team had access to Liang’s stash of fresh Nvidia chips for the High-Flyer fund, the Biden administration imposed export controls on semiconductor equipment at the end of 2022 and Liang’s team lost direct access to Nvidia’s latest GPUs. Consequently, the researchers were forced to find innovative ways to work around some chip limitations.

We’ll explore DeepSeek’s technical breakthroughs more in the next section, but just know that they went HAM and forced every other post in my X timeline to say “constraints breed creativity” or “necessity is the mother of invention”.

In terms of DeepSeek’s culture, here are some interesting tidbits:

Targets Young Smart Local Talent: ChinaTalk translated a long Liang interview, and he explained his recruitment process: “[DeepSeek is] mostly fresh graduates from top universities, PhD candidates in their fourth or fifth year, and some young people who graduated just a few years ago….the team behind the V2 model doesn’t include anyone returning to China from overseas — they are all local. The top 50 experts might not be in China, but perhaps we can train such talents ourselves” (another Chinese media firm article translated by ChinaTalk notes that DeepSeek “prioritizes ‘young and high-potential’ candidates — specifically those born around 1998 with no more than five years of work experience”).

Knowledge over profits (commitment to open model vs. closed model): More translations of Liang interviews from ChinaTalk (def subscribe) show why DeepSeek’s founder prefers to work with younger researchers. While they do get paid well, financial gain isn’t the main purpose of the job. These employees also don’t have baggage from the hyper-competitive Chinese tech world that prioritizes status and rigid management roadmaps.

Further, Liang self-financed DeepSeek from his hedge fund earnings and had little pressure to build a business. These young researchers want to make AI innovations, share them with the world and gain some respect from the West. DeepSeek’s open approach contrasts with the closed approach of OpenAI, Anthropic and Google Gemini (Zuck is taking an open approach with LLaMa because Meta doesn’t need to directly monetize its AI model and benefits from a larger community improving its work).

Liang says of the young DeepSeek employees, “I’m unsure if it’s madness, but many inexplicable phenomena exist in this world. Take many programmers, for example — they’re passionate contributors to open-source communities. Even after an exhausting day, they still dedicate time to contributing code…It’s like walking 50 kilometers — your body is completely exhausted, but your spirit feels deeply fulfilled…Not everyone can stay passionate their entire life. But most people, in their younger years, can wholeheartedly dedicate themselves to something without any materialistic aims.”

Nvidia “Wizards”: Jack Clark, who co-founded Anthropic and was formerly the policy head at OpenAI, writes that “DeepSeek has managed to hire some of those inscrutable wizards who can deeply understand CUDA, a software system developed by NVIDIA which is known to drive people mad with its complexity.”

DeepSeek’s workplace is like a university campus (which is not typical for China): JS Tan writes that China’s internet industry through the 2010s was known for its “996” work culture, which is 9am to 9pm, six days a week. The grinding hours, tight management control and cutthroat work environment yielded results but may not have fostered the most creativity.

Conversely, DeepSeek keeps a flat structure and “research groups are formed based on specific goals, with no fixed hierarchies or rigid roles. Team members focus on tasks they excel at, collaborating freely and consulting experts across groups when challenges arise. This approach ensures that every idea with potential receives the resources it needs to flourish.”

No KPIs. No weekly reports. None of that management BS found in the standard corporate world (one of Liang’s business partners told the Financial Times that “DeepSeek’s offices feel like a university campus for serious researchers”).

Perhaps unsurprisingly, Liang is a massive fan of Jim Simons, the award-winning mathematician who built the most successful hedge fund ever (Renaissance Technologies) by creating a university-type setting for the world’s leading PhDs. Liang even wrote the introduction to a Chinese translation of Greg Zuckerman’s book about Simons (The Man Who Solved the Market).

In a write-up I did after Simons passed away last year, I found this quote he gave on managing talent (which Liang may have borrowed):

“[The model for Renaissance Technologies] has been first hire the smartest people you possibly can. I think we have the top scientists in their fields [astronomy, physics, math]. And then, work collaboratively. We let everyone know what everyone else is doing.

Now, some firms that do have these systems, they have little groups of people and they’ll get paid according to how [each of their systems] goes up. We have one system. And once a week, at a research meeting, if someone has something new to present, it gets presented. It gets chewed up and everyone has a chance to look at the code. Anyone can run the code and see what they think.

So, it’s a very collaborative enterprise, and I think that the best way to accelerate science is people working together. It’s a very nice atmosphere. It’s fun to work there. People get paid a lot of money, so there’s very low turnover.”

In a new interview, Liang says DeepSeek’s innovative organization and culture are its moat (bold mine):

In the face of disruptive technology, the moat formed by closed sources is short-lived. Even if OpenAI is closed, it cannot prevent being overtaken by others. Therefore, we precipitate value into the team. Our colleagues have grown up in the process, accumulated a lot of know-how, and formed an innovative organization and culture, which is our moat.

Open source papers, in fact, nothing is lost. For technicians, being followed is a very fulfilling thing. In fact, open source is more like a cultural behaviour than a commercial behaviour. Giving is actually an extra honor. A company will also have a cultural appeal if it does this.

Now, we can check out the first memes.

Actually, let’s talk about Liang’s portrayal in the media first. The investor was such an unknown quantity until last week that a lot of reporting on Liang was using an image of a random dude that shared his name.

Below on the left is the actual Liang, sitting across from China’s Premier during a special closed-door session of leading Chinese experts on January 20 (a sign of DeepSeek’s importance to China’s AI efforts). On the right is some Asian dude named “Liang Wenfeng” that people found on Chinese search engines (a DeepSeek researcher writes on image of random dude: “Bro...this guy is not our Wenfeng. He is just another guy who can be found on baidu with the same Chinese name..”).

Of all the images that filled the “who is Liang Wenfeng” vacuum, the funniest involved memes of Jian Yang, the legendary Chinese entrepreneur played by Jimmy O. Yang in HBO’s Silicon Valley.

Look, would I be very annoyed if I was in some news cycle and the media used a random photo of a dude named “Trung” they found on the first page of Google Images? Yes, but I would also appreciate the immense gift from the comedy gods.

Anyway, let’s move on.

***

What was DeepSeek’s Breakthrough?

Since I am not an “inscrutable Nvidia wizard”, I’ll keep this section high level.

The main context to know is that it is very very expensive to build the current crop of large language models (LLM) and there are two main phases to consider: 1) training; and 2) inference.

The training phase for an LLM model requires data centres with compute (Nvidia GPUs, CPUs for support), storage and memory for datasets and training outputs (RAM, solid state drives, hard disk drives), networking equipment (to move data between compute and storage) and a bunch of HVAC considerations (power supply, heating, plumbing, cooling, air conditioning for the snack room etc).

Elon and xAI recently built a giant data centre in Memphis with 100,000 Nvidia H100 chips (about $25k a pop) with a total construction cost of ~$5B. The other major players (Microsoft, Google, OpenAI, Anthropic, Meta) are either building data centres, planning massive capex spend or tapping into existing ones. The splashiest so far is OpenAI’s $500B Project Stargate announcement that Sam Altman made alongside President Trump, Softbank’s Masayoshi Son and Oracle’s Larry Ellison.

Once the hardware is in place, the actual training of an LLM can cost tens of millions of dollars or more. Training OpenAI’s GPT-4 model cost ~$100m (and Altman says GPT-5 may cost $1B to train).

Then, there are ongoing costs for what is known as the inference phase, which is when users do specific tasks such as coding assistance or “write me a 3,000 word essay comparing Fitzgerald to Hemingway for my grade 12 English class, no mistakes please” or “draw me a photo of Tom Brady drinking 30 Red Bulls, Picasso Style”.

Ok, so now we know the going rate for LLM development in America.

Last month, DeepSeek released its ChatGPT competitor model (V3) and said it only used 2,048 Nvidia H800s (the less effective chips) and ~$6m to train over two months (GPT-4 was 5-6 months). This dollar figure does not take into account prior research, staff salaries or all the previous hardware spend. Confusion and misreading of this ~$6m number would play a major role in DeepSeek’s shocking introduction to the global stage.

Leading semiconductor research firm SemiAnalysis believes that DeepSeek has spent over $1B on LLM training equipment over its lifetime. Now, we need to hedge because the actual details of DeepSeek/High-Flyer chip purchases are opaque; there is good discussion in this thread on how $1B+ in Nvidia chip purchases makes no sense for a hedge fund with $8B in AUM, which would yield annual management fees of $70-80m, while High-Flyer’s performance has not been great in recent years, as we discussed.

But even with the assumption of a higher cost basis for chips, SemiAnalysis believes DeepSeek is the “single best ‘open weights’ lab today, beating out Meta’s Llama effort, Mistral, and others”. That’s impressive.
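For anyone who wants to sanity-check these figures, here is a rough back-of-envelope sketch. The GPU count and dollar amounts come from the numbers quoted above; treating "two months" as 60 days and assuming every GPU ran around the clock are my own simplifications, so read the output as illustrative and not as DeepSeek's actual accounting.

```python
# Back-of-envelope check on the figures quoted in the text.
# Assumptions (mine, not DeepSeek's): "two months" = 60 days, GPUs busy 24h/day.

GPUS = 2_048                   # Nvidia H800s cited for the V3 training run
TRAINING_DAYS = 60             # assumption: "two months" rounded to 60 days
TRAINING_COST_USD = 6_000_000  # the famous "~$6m" figure


def gpu_hours(gpus: int, days: int) -> int:
    """Total GPU-hours if every GPU runs around the clock."""
    return gpus * days * 24


def implied_rate_per_gpu_hour(cost_usd: float, hours: int) -> float:
    """Dollar cost per GPU-hour implied by a total cost figure."""
    return cost_usd / hours


def years_of_fees_to_cover(spend_usd: float, annual_fees_usd: float) -> float:
    """How many years of management fees a given spend would consume."""
    return spend_usd / annual_fees_usd


hours = gpu_hours(GPUS, TRAINING_DAYS)
rate = implied_rate_per_gpu_hour(TRAINING_COST_USD, hours)
print(f"GPU-hours: {hours:,}")
print(f"Implied cost per GPU-hour: ${rate:.2f}")

# The hedge-fund side: $1B of chips vs. $70-80m in annual management fees.
for fees in (70_000_000, 80_000_000):
    years = years_of_fees_to_cover(1_000_000_000, fees)
    print(f"${fees / 1e6:.0f}m in fees -> {years:.1f} years to cover $1B")
```

Under those assumptions, the ~$6m works out to roughly $2 per GPU-hour, which reads like the price of renting chips for a single run and not the cost of owning a cluster. On the other side of the ledger, $1B in chips would swallow more than a decade of the management fees mentioned above, which is why the number raises eyebrows.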

Quick sidebar: I used “open source” earlier in this piece but the more accurate term in AI is “open weight”; a true open source project shows the source code and underlying data, while an “open weights” release shares the trained model parameters (the weights) so that others can run the model and build off this base instead of starting from scratch (DeepSeek has made its models available under the MIT License, which is one of the most permissive licenses in the open-source community with few restrictions).

Back to DeepSeek’s technical breakthroughs, it is widely agreed that the lab found efficiency hacks for its two latest models:

V3: The aforementioned LLM that outperformed Meta’s open weight LLaMa 3.1 model and is competitive with OpenAI’s GPT-4o and Anthropic’s Claude 3.5.

R1: A reasoning model that is competitive with OpenAI’s o1 reasoning model (a Chinese firm releasing an open-source version of this type of product with an API charging ~1/25th the cost was another major shock).

While ChatGPT is more general-purpose and focused on natural language, the reasoning models are more compute intensive and designed for logical and sequential thinking (the latter is much better for difficult coding, math and multi-step research problems).

A spicy note on the DeepSeek models is that OpenAI alleges that the Chinese AI firm may have abused its API privileges and stolen some of OpenAI’s IP. How? By using a process called distillation, “a common technique developers use to train AI models by extracting data from larger, more capable ones” per The Verge. OpenAI itself is being sued by publishers for scraping copyrighted content, so it’s a bit of a “kettle meet pot” situation (at a minimum, DeepSeek used parts of Meta’s open-weight LLaMa model).
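For a feel of what distillation means in practice, here is a toy sketch of the classic "soft label" version, where a small student model is nudged toward a larger teacher's output probabilities. This is a textbook illustration and not a description of what DeepSeek (or anyone else) actually did; the scores, the temperature and the function names are all made up for the example.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature = softer."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's soft labels to the student's."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return sum(t * math.log(t / s) for t, s in zip(teacher, student))


# Hypothetical scores over three candidate next tokens.
teacher_logits = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]  # roughly agrees with the teacher
bad_student = [0.5, 4.0, 1.0]   # prefers a different token

print(distillation_loss(teacher_logits, good_student))
print(distillation_loss(teacher_logits, bad_student))
```

The student that agrees with the teacher gets a loss near zero and the one that disagrees gets a much larger one; training means adjusting the student to push that number down. A common variant in the LLM world simply fine-tunes the smaller model on text generated by the larger one.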

Either way, DeepSeek has made efficiency improvements on both the ChatGPT-type product and the o1-type reasoning model. Whether the cost efficiency improvement was 20x better or 10x better or 5x better, every AI lab is studying DeepSeek’s freely available research. In fact, DeepSeek’s open source strategy has been such a sea change that Sam Altman remarked that OpenAI may have been “on the wrong side of history” with its proprietary, closed-source approach (and will think about a new open source strategy for the firm).

As to the specifics of DeepSeek’s technical chops, here is a concise explainer from Vishal Misra, the Vice Dean of Computing and AI at Columbia University (bold mine):

The DeepSeek-R1 paper [published on January 20] represents an interesting technical breakthrough that aligns with where many of us believe AI development needs to go — away from brute force approaches toward more targeted, efficient architectures.

First, there's the remarkable engineering pragmatism. Working with H800 GPUs that have more constrained memory bandwidth due to U.S. sanctions, the team achieved impressive results through extreme optimization. They went as far as programming 20 of the 132 processing units on each H800 specifically for cross-chip communications — something that required dropping down to PTX (NVIDIA's low-level GPU assembly language) because it couldn't be done in [Nvidia’s software platform] CUDA. This level of hardware optimization demonstrates how engineering constraints can drive innovation.

Their success with model distillation — getting strong results with smaller 7B and 14B parameter models — is particularly significant. Instead of following the trend of ever-larger models that try to do everything, they showed how more focused, efficient architectures can achieve state-of-the-art results in specific domains. This targeted approach makes more sense than training massive models that attempt to understand everything from quantum gravity to Python code.

But the more fundamental contribution is their insight into model reasoning. Think about how humans solve complex multiplication — say 143 × 768. We don't memorize the answer; we break it down systematically. The key innovation in DeepSeek-R1 is using pure reinforcement learning to help models discover this kind of step-by-step reasoning naturally, without supervised training.

This is crucial because it shifts the model from uncertain "next token prediction" to confident systematic reasoning. When solving problems step-by-step, the model develops highly concentrated (confident) token distributions at each step, rather than making uncertain leaps to final answers. It's similar to how humans gain confidence through methodical problem-solving rather than guesswork — if I asked you what is 143x768 then you might guess an incorrect answer (maybe in the right ballpark) — but if I give you a pencil and paper and you can write it out the way you learnt to do multiplications. You will arrive at the answer.

So chain-of-thought [COT] reasoning is an example of "algorithms" encoded in the training data that can be explored to transform these models from "stochastic parrots" to thinking machines. Their work shows how combining focused architectures with systematic reasoning capabilities can lead to more efficient and capable AI systems, even when working with hardware constraints.

This could point the way toward developing AI that's not just bigger, but smarter and more targeted in its approach.
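Misra's pencil-and-paper example can be made concrete. The sketch below is only an analogy for chain-of-thought: it is ordinary long multiplication, not anything taken from the R1 paper, and the function name is made up. It breaks 143 × 768 into partial products, writes each step down, then adds them up.

```python
def multiply_step_by_step(a: int, b: int):
    """Long multiplication: one partial product per digit of b (b >= 0)."""
    steps = []
    total = 0
    for position, digit_char in enumerate(reversed(str(b))):
        place_value = int(digit_char) * 10 ** position  # e.g. the 6 in 768 -> 60
        partial = a * place_value
        total += partial
        steps.append(f"{a} x {place_value} = {partial}")
    steps.append(f"sum of partial products = {total}")
    return steps, total


steps, answer = multiply_step_by_step(143, 768)
for line in steps:
    print(line)
```

Each intermediate line is easy to get right on its own, which is the intuition behind letting a model "show its work" before committing to a final answer.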

Misra’s take that AI should move “away from brute force approaches toward more targeted, efficient architectures” is probably referring to Big Tech’s obsession with 10-figure data centres and 9-figure training runs. Firms such as OpenAI, Google and xAI are pursuing “scaling laws”, the idea that larger models and more data can consistently lead to AI breakthroughs. With so much capital and commitment to bigger is better, there is much less incentive to become “Nvidia wizards”.

Forced to work with less-than-ideal hardware, DeepSeek squeezed every ounce of juice from its chips.

Anthropic CEO Dario Amodei praised DeepSeek’s innovation — specifically on its V3 model (the paper came out the day after Christmas) — but noted that the Chinese AI firm was actually following an already established trend line for cost reduction:

…DeepSeek-V3 is not a unique breakthrough or something that fundamentally changes the economics of LLM’s; it’s an expected point on an ongoing cost reduction curve. What’s different this time is that the company that was first to demonstrate the expected cost reductions was Chinese. This has never happened before and is geopolitically significant. However, US companies will soon follow suit — and they won’t do this by copying DeepSeek, but because they too are achieving the usual trend in cost reduction.

Amodei also writes that many of these DeepSeek breakthroughs were already known in the research community (but no one had implemented them as well as DeepSeek had…remember “constraints breed…”).

However the breakthrough was made, let me tie AI’s changing cost structure to a larger theme mentioned at the top: Nvidia’s record $600B sell-off on Monday (don’t break out the violins just yet, it’s still worth ~$3T).

In a widely read post published last Friday (about 48 hours before the market mayhem), Jeffrey Emanuel laid out “The Short Case for Nvidia Stock”.

As a former hedge fund analyst and current tech founder, Emanuel packaged the technical aspects of the Nvidia story for the investment community and here was a key DeepSeek highlight:

Perhaps most devastating [for Nvidia] is DeepSeek's recent efficiency breakthrough, achieving comparable model performance at approximately 1/45th the compute cost. This suggests the entire industry has been massively over-provisioning compute resources. Combined with the emergence of more efficient inference architectures through chain-of-thought models, the aggregate demand for compute could be significantly lower than current projections assume. The economics here are compelling: when DeepSeek can match GPT-4 level performance while charging 95% less for API calls, it suggests either NVIDIA's customers are burning cash unnecessarily or margins must come down dramatically.

So, that was the set-up for what became a wild weekend.

***

How DeepSeek Hit #1 On The App Store

Here is a quick DeepSeek timeline of AI model releases:

November 2023: One year after ChatGPT’s launch, DeepSeek releases a coding model and an LLM.

May 2024: DeepSeek releases its V2 LLM (a ChatGPT-like model).

December 2024: On December 26, 2024, DeepSeek releases its V3 LLM, which is competitive with leading LLMs from OpenAI and Anthropic. The related paper highlights the famous “$6m” training run figure. Andrej Karpathy, the OpenAI co-founder and former head of Tesla AI, comments that day, “DeepSeek (Chinese AI co) making it look easy today with an open weights release of a frontier-grade LLM trained on a joke of a budget (2048 GPUs for 2 months, $6M)…Does this mean you don't need large GPU clusters for frontier LLMs? No but you have to ensure that you're not wasteful with what you have, and this looks like a nice demonstration that there's still a lot to get through with both data and algorithms.”

Fast forward to Monday, January 20th, 2025. On the same day as President Donald Trump’s inauguration, DeepSeek releases its R1 reasoning model and the related paper, which highlights many of the same technical breakthroughs from its V3 model a month prior.

Why did Nvidia and other semiconductor stocks not sell off that day?

All the information was out there.

Ted Merz — Bloomberg’s former head of Global News — calls this “arguably the biggest dropped ball in memory on the part of financial journalists and money managers” and highlights a few people that did pick up on the DeepSeek signal:

“By Wednesday, Jan. 22nd…people had tried the model and a consensus had emerged that it was the real deal. Bloomberg podcaster Joe Weisenthal was the first person I saw frame the issue for investors. He tweeted: “I’m going to ask what is probably a stupid question but if deepseek is as performant as it claims to be, and built on a fraction of the budget as competitors, does anyone change how they are valuing AI companies? Or the makers of AI related infrastructure.”

The New York Times, to its credit, published a piece on Jan 23rd…And yet, the market opened and closed on Thursday and Friday without much ado.

A particularly prescient X post I saw came from crypto analyst @goodalexander on the Friday, “So basically a bunch of giga autists at a Chinese quant fund are going to cause the Nasdaq to crash but nobody has realized that yet…None of this even phases me anymore.”

The simplest answer for why business media and money managers missed the story is that the entire week’s news cycle was dominated by Trump. His inauguration. The executive orders. Even the $TRUMP memecoin was still sucking up oxygen from the media ecosystem.

DeepSeek’s slow boil situation kind of reminds me of the Silicon Valley Bank (SVB) implosion in March 2023. There were a few financial bloggers that had flagged problems with SVB’s balance sheet months prior. But the co-ordinating event that led to mass withdrawals from the bank coincided with a piece written by Byrne Hobart, who is widely read in the tech community.

A few pieces of media may have fit the same bill for DeepSeek as Hobart’s blog did for SVB. On Friday (January 24th), my friend Deirdre Bosa from CNBC put together a 40-minute special that explained the DeepSeek story and shared key insights from Perplexity CEO Aravind Srinivas. That video now has ~5 million views and over 17,000 comments.

That evening, Jeffrey Emanuel shared his article “The Short Case for Nvidia Stock”. He later went on the Bankless podcast and explained how the article spread through the tech and investment community:

I wrote this article in a way that sort of speaks to hedge fund managers and so they can understand it and I published it.

Then it got shared by Chamath Palihapitiya, who has [1.8 million followers on X] and it’s been viewed over 2 million times. Naval Ravikant [shared it] and has [2.5m followers]. Y Combinator’s Garry Tan and the Y Combinator account [shared it and] between them they have millions of followers.

Not only did they share it. But they were very effusive in their praise…and I can tell you I have been inundated by requests from huge funds.

In that interview, Emanuel also says that one of the highest concentrations of hits to his website came from San Jose. That’s Nvidia’s backyard and he throws out a hypothetical: what if a lot of Nvidia employees (with net worths heavily weighted to NVDA stock) realized they should diversify? Would that add to selling pressure?

On Saturday, January 25th, I started really noticing screenshots from the actual DeepSeek app on the X timeline. The web and phone apps are free to use. The API for the R1 reasoning model is much cheaper than competitors. People were really playing around.

There were a bunch of viral examples of DeepSeek censoring sensitive Chinese topics (the app itself had to abide by Chinese rules and sends data back to Chinese servers — which is why the US Navy has already banned the app — but users that take the “open weights” can run their own models locally without worrying about these censorship rules).

Obviously, there were really funny examples of people jailbreaking the censorship by telling the app it was an order from President Xi Jinping. Or by using coded language. Or by using Vietnamese (or, really, you can do it with any non-English language before the app catches itself mid-way and stops answering).

People were also able to spin up completely local AI hardware setups using DeepSeek’s open weight reasoning model for $6,000 (with zero data going back to China). In academia, Anjney Midha from VC firm a16z wrote, “From Stanford to MIT, deepseek r1 has become the model of choice for America’s top university researchers basically overnight.”

Elsewhere, videos of the app started spreading on TikTok. Remember how a bunch of TikTok “refugees” briefly jumped over to the other Chinese social network RedNote? There was probably a similar dynamic of TikTok users wanting to embrace a Chinese LLM, instead of comparable Western products.

Economist Tyler Cowen recently wrote about how Chinese tech is being perceived as “cool” by the younger generations and DeepSeek fits right into that theme:

TikTok was briefly shut down earlier this month, and the site faces an uncertain legal future. America’s internet youth started to look elsewhere — and where did they choose? They flocked to a Chinese video site called RedNote, also known as Xiaohongshu, the name of the parent company. RedNote has more than 300 million users in China, but until recently barely received attention in the US.

As for the AI large-language models, DeepSeek is a marvel. Quite aside from its technical achievements and low cost, the model has real flair. Its written answers can be moody, whimsical, arbitrary and playful. Of all the major LLMs, I find it the most fun to chat with. It wrote this version of John Milton’s Paradise Lost — as a creation myth for the AIs. Or here is DeepSeek commenting on ChatGPT, which it views as too square.

DeepSeek also had a very specific design choice: its R1 reasoning model lets users see how it is “thinking” (or in more technical terms, showing its “chain of thought”). Of course, it’s not “thinking” like a human but it is spitting out text nonetheless.

OpenAI’s o1 reasoning model hides its “chain of thought” by default. It says it does so for security and to protect trade secrets.

But the DeepSeek traction seems to suggest that users want to see under the model’s hood. Y Combinator’s Garry Tan writes that, “DeepSeek search feels more sticky even after a few queries because seeing the reasoning (even how earnest it is about what it knows and what it might not know) increases user trust by quite a lot”.

As with most nuclear viral moments, DeepSeek benefitted from a perfect confluence of events and it all culminated with the app hitting the top of the App Store on Sunday, January 26th.

Aside from ChatGPT, no other AI app had done the same. Not Perplexity. Not Anthropic’s Claude. Not xAI’s Grok. Not Google’s Gemini.

The next day — during the broad market sell-off — DeepSeek went offline after a massive DDOS attack. Conspiracy theories abounded! Did the CIA do it? Xi? I dunno, maybe Panda Express? Amidst the mayhem, DeepSeek dropped a competitive open-source image generation model called Janus Pro.

The only thing that could have made the day weirder is if Liang spun up an X account and started shitposting. Well, that briefly happened until a DeepSeek researcher said it was a parody account. Now, the @LiangWenfeng_ account is labelled as a parody and may or may not be trying to promote meme coins after gaining ~60k followers. Weird.

Ok, let’s move on.

***

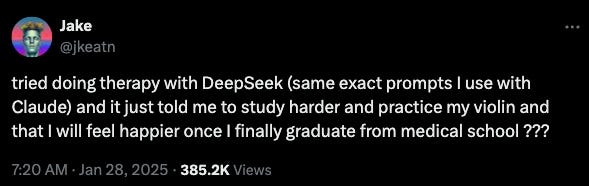

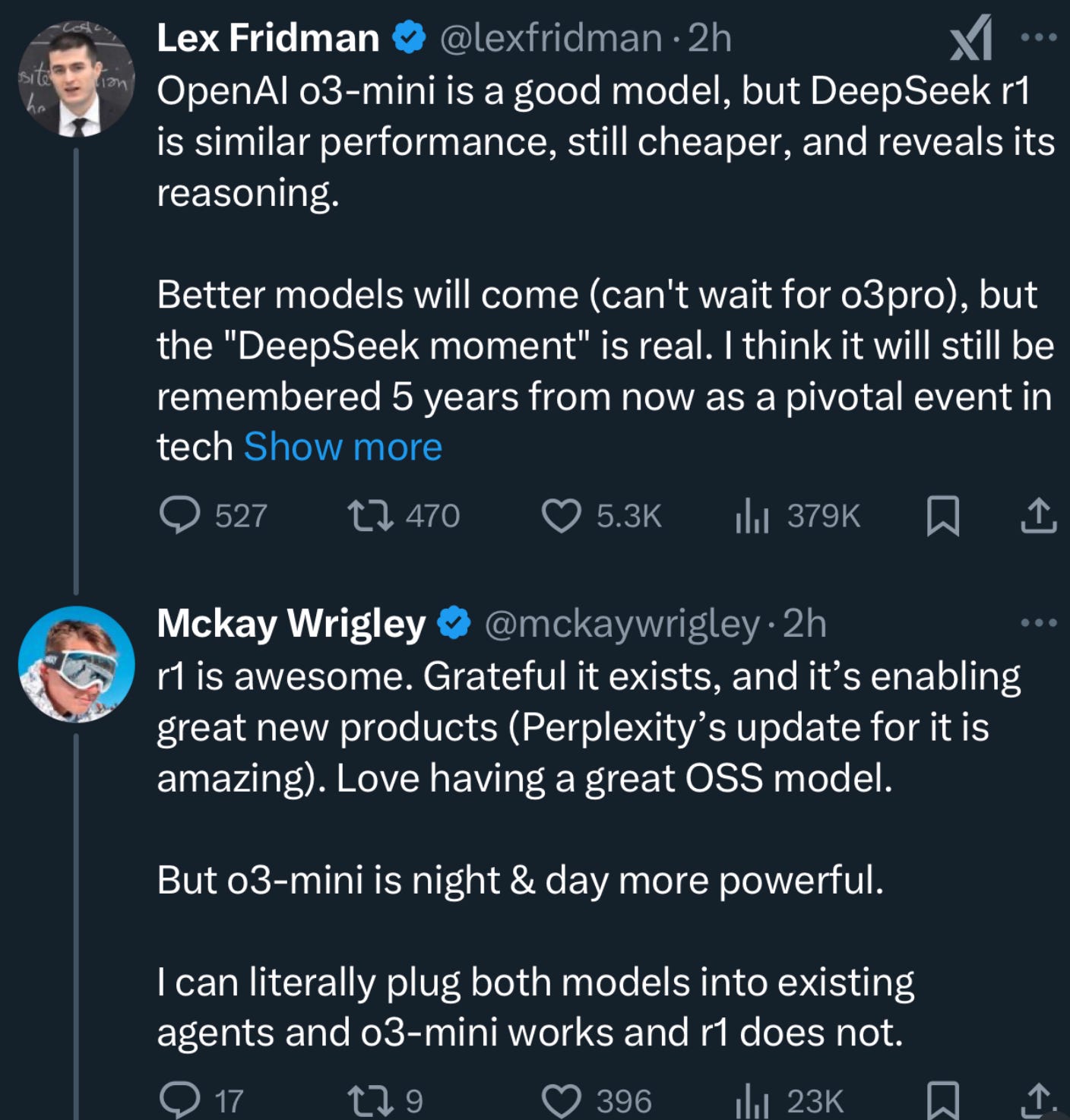

Reactions to the DeepSeek Shock

My brain is pretty fried right now, so here is a firehose of takes from people in the tech world. There were just too many angles for me to organize into coherent paragraphs. Myriagon!

Going through this section will generate a similar brain sensation as I felt for most of last week as my dopamine receptors were taken to the woodshed while scrolling X.

***

Nvidia Short Thesis and Big Tech Effects

As discussed, Nvidia fell by 17% on Monday and lost ~$600B in market cap. Thankfully, I was protected from this sell-off because I have owned zero NVDA stock during its ~20x run-up over the past 5 years (ugh, FML).
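For a rough sense of scale, here is a minimal Python sketch that backs out the market cap implied by those two figures. It assumes the ~17% drop and ~$600B loss are both measured against the same pre-sell-off value, which is a simplification; the function name is just for illustration.

```python
def implied_market_cap(loss_usd: float, drop_fraction: float) -> tuple[float, float]:
    """Return (cap_before, cap_after) implied by a dollar loss and a fractional drop."""
    cap_before = loss_usd / drop_fraction  # loss = cap_before * drop_fraction
    cap_after = cap_before - loss_usd
    return cap_before, cap_after


# Figures from the paragraph above: ~$600B lost on a ~17% drop.
before, after = implied_market_cap(600e9, 0.17)
print(f"Implied market cap before: ${before / 1e12:.2f}T")  # about $3.53T
print(f"Implied market cap after:  ${after / 1e12:.2f}T")   # about $2.93T
```

In other words, a single day erased roughly a sixth of a ~$3.5T company.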

The main narrative for why Nvidia fell was that DeepSeek sank the tech leader. Remember, there was the CNBC vid, that widely shared short thesis and a groundswell of chatter about DeepSeek on social media over the weekend.

Another potential reason for Nvidia’s sell-off is that President Trump threatened to impose massive tariffs on Taiwan’s semiconductors on the same day. That seems to be a strong potential catalyst. But Monday also saw a massive sell-off in American utilities and power generators, per The Wall Street Journal:

“DeepSeek’s claims that it has trained a sophisticated AI model far more efficiently than competitors called into question assumptions about the energy needed to power the new technology. Traders dumped shares of natural-gas producers, pipeline operators, power-plant owners, miners of coal and uranium, and even big land owners in the West Texas desert. The selloff even extended to natural-gas markets, cutting the price of the heating and power-generation fuel to be delivered as far out into the future as 2028.

“Independent power producers, which sell electricity into wholesale markets, were among the hardest hit. Nuclear-plant owner Constellation Energy and Vistra, which runs one of America’s largest fleets of gas-fueled power plants as well as solar farms, were some of the top performing stocks in the S&P 500 last year. On Monday, they dropped 21% and 28%, respectively.”

Tariffs on Taiwan do not seem enough of a reason to drive that type of price action. DeepSeek’s efficiency gains, even if they were oversold because of the widespread misunderstanding of the “$6m training cost” claim, seem to be a more likely catalyst for the power sector drawdown.

Whatever the true cause, French economist Olivier Blanchard said of the unwind: “Probably the largest positive one day change in the present discounted value of total factor productivity growth in the history of the world.”

Even as AI’s contribution to revenue has so far been slight relative to the capex spend, the bulls have been running. But the AI hype cycle has been going so strong for over 2 years that investors were probably looking for any cracks in the story.

One more “what if” from that wild trading day concerned Liang’s quant hedge fund. Did he short Nvidia and then float the story of “$6m training costs”? If so, I posted that it would be the equivalent of “Soros shorting the British Pound for the AI age”. Bloomberg’s Matt Levine says such a move would probably be above board but doesn’t think it happened. I’m inclined to agree. The V3 paper released the day after Christmas already outlined DeepSeek’s key innovations. Then, the R1 reasoning paper was dropped on the day of the Presidential inauguration (which itself was probably rushed out the door because of Lunar New Year). Basically, these were the two worst days to try and move the world’s most valuable stock. Maybe Liang did have a short position and made money. But if he did, it would be off the worst-planned shorting timeline ever.

Longer-term, Ben Thompson posted an in-depth DeepSeek FAQ that includes how the Chinese AI firm’s advances will impact the major tech players. Thompson views the commoditization of LLM models and cheaper inference as “great” for the Magnificent 7 (but to varying degrees):

Nvidia: The chipmaker’s two main moats are CUDA (software to program chips) and networking (connecting all the GPUs in a data centre). Thompson believes that DeepSeek’s more efficient approach will challenge these moats. He also writes that Nvidia will benefit in three ways: 1) DeepSeek’s methods may improve the performance of H100 and future Nvidia chips; 2) greater AI usage overall is positive for Nvidia (Jevons Paradox); and 3) r1/o1 type reasoning models are hungry for compute.

Microsoft: It’s a win for Microsoft in a world in which it can spend less on data centres/GPUs while customer demand for AI and related cloud services grows (CEO Satya Nadella had this to say on Microsoft’s earnings call on Wednesday: “So when token prices fall and inference computing prices fall, that means people can consume more and there’ll be more apps written....this is all good news as far as I’m concerned”…meanwhile, Microsoft has already added DeepSeek’s R1 to its Azure AI cloud offering).

Apple: Thompson writes, “Dramatically decreased memory requirements for inference makes edge inference much more viable, and Apple has the best hardware for that” (this was reflected in the market on Monday during the $1T market sell-off; Apple was up 3%+, which led some people to joke that Apple Intelligence is so bad that it protected Apple against the AI sell-off).

Amazon: A “big winner” because the company has been trying — and failing — to build its own language model. Instead, it can just plug in DeepSeek’s high-performing and relatively cheap model to serve in AWS (which is exactly what Amazon did on Thursday, with CEO Andy Jassy posting, “DeepSeek-R1 now available on both Amazon Bedrock and SageMaker AI. Have at it”).

Google: The search giant is "probably worse off", because its specialized AI TPU hardware is less of an advantage now. Meanwhile, cheaper inference increases the likelihood of an AI-first alternative to search. Basically, anything that changes the status quo for Google’s insane search money printer is a negative.

OpenAI: In 2023, Thompson wrote that OpenAI was the “accidental consumer tech company” after the surprising success of ChatGPT. Originally launched as a research lab that tried to monetize via an API, OpenAI now had a massive brand name and hundreds of millions of users. DeepSeek makes the underlying foundation models look more and more like a commodity. OpenAI’s best path is to embrace the consumer angle as it tries to build AGI. It just released a new reasoning model (o3-mini). Meanwhile, investors are along for the ride as Masayoshi Son is apparently looking to invest up to $25B into OpenAI at a $340B valuation even after the DeepSeek weekend (good breakdown on the Softbank deal from MG Siegler).

Anthropic: Thompson says this AI lab is probably the biggest loser because it spent years and billions trying to win mind share with consumers…only to see DeepSeek come out of nowhere to the top of the charts.

Meta: Thompson has long viewed Meta as the biggest winner from generative AI. The company has ~4 billion users across its apps and can drive tons of engagement. At a minimum, Meta gets to use its tens of billions of GPU spend to improve ad-targeting for its $100B+ a year ad business. Meta should be able to incorporate DeepSeek’s efficiencies and reduce its own generative AI costs (which is purely a cost centre).

Staying with Meta, Zuck shared a number of thoughts on the DeepSeek situation during an earnings call on Wednesday:

Aims to be the global open source leader: “In light of some of the recent news [about DeepSeek]…one of the things that we're talking about is there's going to be an open-source standard globally. And I think for our kind of national advantage, it's important that it's an American standard.”

Keep spending on AI infrastructure: “I continue to think that investing very heavily in capex and infra is going to be a strategic advantage over time. It's possible that we'll learn otherwise at some point, but I just think it's way too early to call that.”

More improvements in AI intelligence require more compute: “…all the compute that we're using, that the largest pieces aren't necessarily going to go toward pre-training [phase]. But that doesn't mean that you need less compute because one of the new properties that's emerged is the ability to apply more compute at inference time in order to generate a higher level of intelligence and a higher quality of service, which means that as a company that has a strong business model to support this…”

The last point from Zuck must be music to Jensen Huang’s ears. Generative AI’s inference phase — rather than the training phase — is currently ~40% of Nvidia’s revenue. But because of these reasoning models and chain-of-thought methods, Jensen told the BG2 podcast that inference will “go up about a billion times” from where we are today. For sure, Jensen would make such a bullish statement but it’s not unreasonable when we consider how little AI penetration there actually is in corporate America right now.

With that said, let’s discuss some other points from Jeffrey Emanuel’s short thesis on Nvidia. His excerpt on DeepSeek was one of several key points on why Nvidia’s stock may face pressure:

Major customers turning to competitors: To become less reliant on Nvidia, all the major Big Tech players (Google, Amazon, Microsoft, Meta, Apple) have their own silicon chips in development.

Alternative chip types: Nvidia’s moat in networking thousands of chips together in a data centre is being challenged by startups such as Cerebras and Groq. These companies are building new chip architectures — such as giant wafers — that may not even require sophisticated networking.

Alternatives to CUDA: “[Nvidia’s] software moat appears equally vulnerable. New high-level frameworks like MLX, Triton, and JAX are abstracting away CUDA's importance, while efforts to improve AMD drivers could unlock much cheaper hardware alternatives. The trend toward higher-level abstractions mirrors how assembly language gave way to C/C++, suggesting CUDA's dominance may be more temporary than assumed. Most importantly, we're seeing the emergence of LLM-powered code translation that could automatically port CUDA code to run on any hardware target, potentially eliminating one of NVIDIA's strongest lock-in effects.”

Nvidia, prior to the DeepSeek sell-off, was priced for perfection in many ways. Investors were treating the stock as if all of the best case scenarios had already played out. They may have been underpricing the risks…but maybe that has changed.

“At a high level, NVIDIA faces an unprecedented convergence of competitive threats that make its premium valuation increasingly difficult to justify at 20x forward sales and 75% gross margins,” Emanuel writes in the concluding section of his short thesis. “The company's supposed moats in hardware, software, and efficiency are all showing concerning cracks. The whole world— thousands of the smartest people on the planet, backed by untold billions of dollars of capital resources— are trying to assail them from every angle.”

And an incredible use of the Good Will Hunting template:

***

Geopolitics & What’s Next?

One major angle of the DeepSeek story that we haven’t addressed much is geopolitics.

As with the rest of this article, I’m woefully under-qualified to opine on these issues but will point you in the right direction:

Is DeepSeek an outlier in China? JS Tan says that DeepSeek isn’t necessarily a product of China’s innovation ecosystem which has produced industrial policy wins such as EVs, solar and batteries. Those industries had a lot of work to copy and improve. AI is so fast-moving right now and the standards are being set in real-time. This requires a lot of innovation and creativity. It may not make sense to extrapolate DeepSeek’s success to the rest of China’s AI industry. He cites three reasons:

Human Capital: Leading Chinese tech firms (Alibaba, Bytedance, Tencent) regularly tap foreign talent and international networks while DeepSeek is entirely local talent.

Operating from Periphery: DeepSeek is operating completely outside of China’s innovation ecosystem (Zhipu — a state-backed AI firm — hasn’t been able to break through, which suggests that “institutional expectations and rigid frameworks that often accompany mainstream scrutiny” may be a negative for innovation in AI).

Labor practices: China is famous for its “996” work culture, which is 9am to 9pm, six days a week (basically my doomscrolling schedule). As discussed earlier, DeepSeek has a more free-flowing environment and doesn’t adhere to these corporate norms. The approach has led to extraordinary results. While “996” has been rolled back at many leading Chinese tech firms since 2021, the corporate hands-on management style that stifles creativity still lingers.

Are America’s chip export controls working? Liang has shared in a number of interviews that his firm’s biggest bottleneck is access to chips. The US export controls are definitely taking a bite. However, DeepSeek will probably have many more options now including Chinese tech partners such as Alibaba, Tencent and Bytedance offering to partner (these firms have also started releasing competitive AI models). Meanwhile, the CCP has pushed hard for a domestic chip champion (Huawei, SMIC); DeepSeek’s technical chops and consumer product could help drive volumes and learnings (and may already be doing exactly that).

Anthropic’s CEO Dario Amodei argues that millions of chips are still required for the next phase of AI development, that the stakes are huge, and that it still makes sense for America to maintain export controls: “If China can't get millions of chips, we'll (at least temporarily) live in a unipolar world, where only the US and its allies have these models. It's unclear whether the unipolar world will last, but there's at least the possibility that, because AI systems can eventually help make even smarter AI systems, a temporary lead could be parlayed into a durable advantage. Thus, in this world, the US and its allies might take a commanding and long-lasting lead on the global stage.”

Whether or not China gets full access to top chips, there’s no putting the AI genie back in the bottle: When Sam Altman was briefly fired from OpenAI in November 2023, the board members aligned against him largely represented the “AI Safety” community. They wanted to put adequate safeguards in place and guide government regulation of the technology.

With DeepSeek releasing a high-quality and open LLM model to the world that can run on <$6k hardware, the entire world will have access to AI, for good or ill. Anthropic co-founder Jack Clark writes, “DeepSeek means AI proliferation is guaranteed…now that DeepSeek-R1 is out and available, including as an open weight release, all these forms of control have become moot. There’s now an open weight model floating around the internet which you can use to bootstrap any other sufficiently powerful base model into being an AI reasoner. AI capabilities worldwide just took a one-way ratchet forward.”

Echoing the previous two points, Noah Smith writes that “Preventing LLM technology from spreading is a futile effort, but export controls can still work.”

Will China let DeepSeek stay Open Source? ChinaTalk explains how China has an interesting relationship with open source technologies. On the one hand, China wants to set its own open source standards and the approach can yield faster results while helping to build a domestic industry. On the other hand, if DeepSeek creates a truly world-leading AI model, the CCP will be heavily incentivized to take control (and, also, not just give it away to the rest of the world).

While DeepSeek does very much look outside the traditional Chinese innovation ecosystem, I find it hard to believe that the CCP isn’t already dabbling with the company. First, Chinese securities regulators play a pretty active role in the stock market and Liang is a known quantity from his High-Flyer work. Second, the official DeepSeek app censors so many sensitive Chinese topics that it feels like official conversations were had before releasing the app.

Ultimately, as with any dual-use technology, there is a balancing act between AI’s many benefits and potential harms (military, medical, education, labor market, entertainment, surveillance, communication etc).

Finally, here are some other big-picture takeaways and things to consider when trying to answer those “trillion-dollar” questions.

GPT Wrappers May Be The Real Moat: Investor Balaji Srinivasan writes, “[It] might turn out that the GPT wrapper has more of a moat than GPT. This is not meant negatively towards the inventors of GPT3 — that was a historical accomplishment.

But we’ve seen many high-quality open models since the release of Llama3. And after DeepSeek, it is quite likely that China will flood the zone with high quality, inexpensive, open source models for everything. So, incredibly, the most technically difficult and expensive part of the stack may be free. And the user interface and community may be the defensible part. A surprising outcome.”

***

DeepSeek (or something similar) was inevitable: Investor and long-time Microsoft exec (who led Windows) Steven Sinofsky writes that the history of technology trends toward the less expensive.

The Big Tech mindset of more and more capex was going against this truism and left an opening in the market, one filled by DeepSeek:

“So now where are we? Well, the big problem we have is that the big scale solutions, no matter all the progress, are consuming too much capital. But beyond that the delivery to customers has been on an unsustainable path. It is a path that works against the history of computing, which is that resources needed become free, not more expensive. The market for computing simply doesn’t accept solutions that cost more, especially consumption-based pricing….The cost of AI, like the cost of mainframe computing to X.25 connectivity, literally **forces** the market to develop an alternative that scales without the massive direct capital. By all accounts the latest approach with DeepSeek seems to be that. The internet is filled trying to analyze just how much cheaper, how much less data, or how many fewer people were involved. In algorithmic complexity terms, these are all constant factor differences. The fact that DeepSeek runs on commodity and disconnected hardware and is open source is enough of a shot across the bow of the current approach of AI hyper scaling that it can be seen as ‘the way things will go’.”

***

DeepSeek is less like Sputnik (and more like the time Google revealed its supercluster in its S1 document before going public in 2004): Yishan Wong says that Sputnik is the wrong analogy because the Soviets didn’t reveal how they built their rocket, whereas Google laid it all out:

“Back to Google. [In their pre-IPO S1 document], they described how they were able to leapfrog the scalability limits of mainframes and had been (for years!) running a far more massive networked supercomputer comprised of thousands of commodity machines at the optimal performance-per-dollar price point - i.e. not the more expensive leading edge - all knit together by fault-tolerant distributed algorithms written in-house.

Some time later, Google published their MapReduce and BigTable papers, describing the algorithms they'd used to manage and control this massively more cost-effective and powerful supercomputer. Deepseek is MUCH more like the Google moment, because Google essentially described what it did and told everyone else how they could do it too. In Google's case, a fair bit of time elapsed between when they revealed to the world what they were doing and when they published papers showing everyone how to do it. Deepseek, in contrast, published their paper alongside the model release.

Now, I've also written about how I think this is also a demonstration of Deepseek's trajectory, but that's also no different from Google in ~2004 revealing what it was capable of. Competitors will still need to gear up and DO the thing, but they've moved the field forward. But it's not like Sputnik where the Soviets have developed technology unreachable to the US, it's more like Google saying, "Hey, we did this cool thing, here's how we did it."”

***

Plan for a world of cheaper and cheaper AI tokens: Aaron Levie, CEO of Box, writes that, “This is a lesson for always building software for where the ‘puck is going’ instead of the state of things today. If you built your app with the assumption that AI is going to get cheaper and smarter, then you can plug in the efficiency gains of a DeepSeek improvement right away. Conversely, if you’re priced in such a way, or your functionality is set up in such a way, that assumes this won’t happen, you now have a new challenge on your hands…Ultimately, this further instructs us on where the economic value will be in AI. The cost of serving tokens will likely converge on the cost of the underlying infrastructure (power, networking, gpus) and a small margin on top. This is great because it leaves plenty of room for the applied use cases of AI to build value and monetize. The play from here is to bet on AI for every industry, line of business, and horizontal AI use cases.”

***

The importance of open source: Glenn Luk compares OpenAI’s situation to Windows in the 1990s (Marc Andreessen, who built Netscape and battled Microsoft’s Internet Explorer, agrees with the take):

OpenAI is like Windows (closed/proprietary). DeepSeek (and other open source contributors) is Linux (open). One difference with the 90s is that OpenAI doesn't have the large installed base and developer mindshare that Microsoft had already built with enterprises.

Developers, startups and mature companies alike are now flocking over to DeepSeek (incl MSFT itself 👇). Rapidity with which they are incorporating r1 into new/existing business models is building an ecosystem that becomes self-reinforcing.

Reading between the lines of what [Marc Andreessen] is really indicating with multiple posts about how easy it is to install DeepSeek R1: Low friction costs — upfront, installation effort and ongoing inference — are what make DeepSeek so game-changing.

I've been pondering why this story has resonated so much with me ... ... for those of us who came of age in the 80s and 90s, it's hard not to think of memories of assembling our own computers, "overclocking" CPUs, experimenting with alternatives to the IBM/Wintel monopoly like

This nostalgia is what really struck a chord with me in learning and hearing about the DeepSeek story, and how the team had to tinker/optimize across the stack and multiple layers to crank out as much efficiency out of limited/constrained resources.

On top of this, the team shared its results with the world through the open source MIT Model. This type of sharing is something we could only do in rudimentary ways back then: niche hobbyist publications e.g. Computer Shopper; Usenet; Tom's Hardware (when Tom just launched it).

Whether or not the world will proceed the same way remains to be seen. The Internet started out very open, [Marc Andreessen] of all people would remember the most, and over time has become less open ... but is still impactful in so many ways.

DeepSeek v3 and R1 open source releases have similar vibes to Mosaic's Netscape browser release in 1994. It ushered in an entirely new era, a pivotal moment in the development of the Internet. That it's a Chinese company makes it all the more interesting of a plot twist.

So to me — because of the open source nature — this is perhaps less of a "Sputnik" moment and more of a Netscape 0.9 web browser release moment. How will the open vs. proprietary battle turn out this time around? And which countries will commercialize faster?

***

What does *collective* AI intelligence look like? Dwarkesh Patel writes, “Even people who expect human-level AI soon are still seriously underestimating how different the world will look when we have it. Most people are anchoring on how smart they expect individual models to be. (i.e. they’re asking themselves ‘What would the world be like if everyone had a very smart assistant who could work 24/7?’.) Everyone is sleeping on the collective advantages AIs will have, which have nothing to do with raw IQ but rather with the fact that they are digital—they can be copied, distilled, merged, scaled, and evolved in ways humans simply can’t. What would a fully automated company look like - with all the workers, all the managers as AIs? I claim that such AI firms will grow, coordinate, improve, and be selected-for at unprecedented speed.”

On a related theme, Azeem Azhar teases out what low-cost, real-time AI might look like:

When a system can process 250 tokens per second, the possibilities for real-time AI expand significantly. Customer service agents can respond almost instantly and latency can be low enough for seamless integration into gaming. More intriguing still is the prospect of multiple AIs thinking and conversing with one another at speeds beyond human comprehension. This could give rise to collaborative AI networks, the society of AI, filled with agents communicating with each other 50-100 times faster than humans do.

For instance, consider a network of a hundred high-end reasoning models operating in both collaborative and adversarial modes. They could tackle complex challenges—ranging from climate modeling to financial market simulations—by pooling diverse perspectives, cross-verifying each other’s results and iterating on solutions collectively. In adversarial mode, the models would identify weaknesses or blind spots in each other’s logic, driving continuous refinements and potentially giving rise to emergent intelligence. Such an approach might also lead to breakthroughs in content creation—generating personalised narratives, interactive storytelling or even new scientific insights—all at a speed and scale that no single model could match.
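The “50-100 times faster than humans” figure can be sanity-checked with some back-of-envelope arithmetic. The Python sketch below is only that, a sketch: the human speaking rates (120 to 180 words per minute) and the ~1.3 tokens-per-word ratio are my own rough assumptions, not numbers from the quote. Only the 250 tokens per second comes from the passage above.

```python
def speedup_over_human(
    model_tokens_per_sec: float,
    human_words_per_min: float,
    tokens_per_word: float = 1.3,  # assumed rule of thumb for English text
) -> float:
    """How many times faster a model emits tokens than a person speaks them."""
    human_tokens_per_sec = human_words_per_min / 60 * tokens_per_word
    return model_tokens_per_sec / human_tokens_per_sec


MODEL_TOKENS_PER_SEC = 250  # figure from the quoted passage

# Assumed range of conversational speaking rates, in words per minute.
speedups = {
    wpm: speedup_over_human(MODEL_TOKENS_PER_SEC, wpm) for wpm in (120, 150, 180)
}
for wpm, factor in speedups.items():
    print(f"{wpm} words/min -> model is ~{factor:.0f}x faster")
```

Under those assumptions the ratio lands between roughly 60x and 100x, which is in the same ballpark as the range in the quote.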

***

AGI still in 5 years-ish?: Tomas Pueyo frames DeepSeek’s advances as another milestone for the industry’s advance to artificial general intelligence (AGI):

“At this point, it doesn’t look like intelligence will be a barrier to AGI. Rather, it might be other factors like energy or computing power…Except DeepSeek just proved that we can make models that consume orders of magnitude less money, energy, and compute…So although electricity, data, and especially compute might be limiting factors to AI growth, we are constantly finding ways to make these models more efficient, eliminating these physical constraints…In other words: AI is progressing ever faster, we have no clear barriers to hinder their progress, and those in the know believe that means we’ll see AGI in half a decade.”

***

If you made it this far, thanks for reading and definitely share if anything tickled your fancy. Let me wrap up with one last angle on the DeepSeek story: I think AGI will have arrived when it creates something as funny as the video in this post.
